Technoscenti titans Elon Musk and Mark Zuckerberg have gotten into a bit of a tiff lately, with Musk repeating warnings that rapidly developing artificial intelligence (AI) poses an existential threat to humanity, and Zuckerberg countering that such concerns are much ado about not much.

During a recent Facebook Live broadcast from his backyard, Zuck said he was “really optimistic” about AI, and disparaged “naysayers… [who] try to drum up these doomsday scenarios…”

Musk responded on Twitter in a restrained but still somewhat contemptuous fashion, stating, “I’ve talked to Mark about this. His understanding of the subject is limited.”

Of course, the debate over the risk of AI is hardly limited to Musk and Zuckerberg. Stephen Hawking, for example, sides with Musk, maintaining that superintelligent AI could be a slate cleaner for humanity. Futurist Ray Kurzweil, on the other hand, is giddy at the prospect of The Singularity, that moment at which AI achieves self-awareness and soups itself up at an ever-accelerating rate, ultimately subsuming everything from your grandma to the Kuiper Belt into a gigantic hive mind. Not really such a bad thing, Kurzweil implies. Besides, resistance is futile.

It’s hard for the average citizen to determine where the truth between these polarities lies, so CALIFORNIA asked two UC Berkeley AI experts for their takes.

Stuart Russell, a professor of computer science and a principal at Cal’s Center for Human-Compatible AI, thinks Musk is taking both a reasoned and reasonable approach to the issue.

“Musk’s suggestion that the U.S. government should form some kind of regulatory commission to monitor AI and keep Congress and the executive branch well-informed is mirrored by a legislative proposal from Sen. Maria Cantwell (D-Washington), hardly a wild-eyed tinfoil-hat-wearer,” Russell stated in an email. “It seems eminently sensible.”


The alternative, Russell continues facetiously, is that “the U.S. could replicate the EU’s debate on enshrining Asimov’s Three Laws of Robotics in legislation.”

Zuckerberg, on the other hand, “…seems to be missing the point entirely [by maintaining] that if you’re arguing against AI you’re arguing against safer cars that aren’t going to have accidents,” says Russell. “First, Musk isn’t against AI. After all, he funds a lot of it both within Tesla and elsewhere [OpenAI, DeepMind]. He just wants people to recognize that it has downsides, possibly very large [downsides], in the future, and to work on avoiding those.”

Russell compares the brouhaha over AI to disputes over nuclear power.

“You can be in favor of nuclear power while still arguing for research on [reactor] containment,” he observes. “In fact, inadequate attention to containment at Chernobyl destroyed the entire nuclear industry worldwide, possibly forever. So let’s not keep presenting these false dichotomies.”

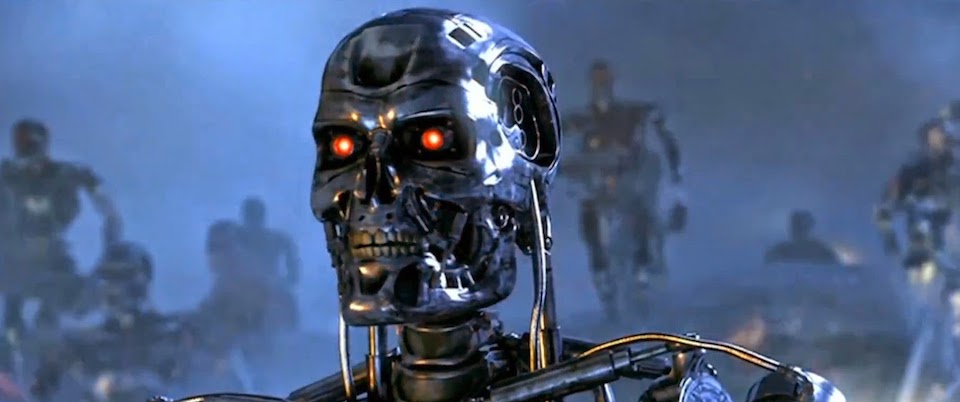

Dylan Hadfield-Menell, a PhD candidate in computer science at Cal who conducts AI research under Russell and is considered a rising star in his field, said the general public largely misunderstands the innate qualities of AI, which leads to a distorted understanding of the risks. Rogue AI is often presented as a Skynet scenario à la The Terminator: it suddenly achieves awareness and then decides to kill all humans. That’s not the real problem, says Hadfield-Menell. It’s more a matter of motivating AI improperly, or of the AI employing unexpected means to achieve its programmed ends.

“Take a [computer-enhanced] vacuum cleaner that you’ve programmed to suck up as much dirt as possible,” says Hadfield-Menell. “It quickly sucks up all the available dirt. So at that point, it dumps out the dirt it already has collected so it can suck it back up again. We run into comparable problems in our work all the time.”
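For readers who want to see the vacuum problem in miniature, here is a toy sketch in Python. It is our own hypothetical illustration, not code from Hadfield-Menell’s research: the two-number room model (dirt on the floor, dirt in the bin), the `proxy_reward` and `intended_reward` functions, and the brute-force planner are all simplifying assumptions. The planner simply tries every possible sequence of actions and keeps whichever scores best under the reward it is given.

```python
from typing import Callable, List, Tuple

# Hypothetical toy model: (dirt on the floor, dirt in the vacuum's bin).
State = Tuple[int, int]
Reward = Callable[[State, str, State], int]


def legal_actions(state: State) -> List[str]:
    floor, bin_dirt = state
    actions = ["wait"]
    if floor > 0:
        actions.append("suck")  # pick up one unit of dirt
    if bin_dirt > 0:
        actions.append("dump")  # spill one unit back onto the floor
    return actions


def step(state: State, action: str) -> State:
    floor, bin_dirt = state
    if action == "suck":
        return (floor - 1, bin_dirt + 1)
    if action == "dump":
        return (floor + 1, bin_dirt - 1)
    return state


def proxy_reward(state: State, action: str, next_state: State) -> int:
    # The misspecified goal: +1 for every unit of dirt sucked up.
    return 1 if action == "suck" else 0


def intended_reward(state: State, action: str, next_state: State) -> int:
    # What the owner actually wants: as little dirt on the floor as possible.
    return -next_state[0]


def best_plan(state: State, horizon: int, reward: Reward) -> Tuple[int, List[str]]:
    """Exhaustively search all action sequences of length `horizon`."""
    if horizon == 0:
        return 0, []
    best_score, best_actions = None, []
    for action in legal_actions(state):
        nxt = step(state, action)
        future_score, future_actions = best_plan(nxt, horizon - 1, reward)
        score = reward(state, action, nxt) + future_score
        if best_score is None or score > best_score:
            best_score, best_actions = score, [action] + future_actions
    return best_score, best_actions


if __name__ == "__main__":
    start = (2, 0)  # two units of dirt on the floor, empty bin
    print("proxy goal:   ", best_plan(start, 6, proxy_reward))
    print("intended goal:", best_plan(start, 6, intended_reward))
```

Starting with two units of dirt and six moves, the plan that maximizes the proxy reward dumps dirt back out twice so it can suck it up again, scoring 4 “pickups” from a floor that only ever held 2. The plan that maximizes the intended reward cleans the floor and never dumps. The vacuum is doing exactly what it was told; the trouble lies in what it was told.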

The real problem, says Hadfield-Menell, “isn’t that AI ‘comes alive’ and starts pursuing its own goals. It’s that we give it the wrong motivation, or that it interprets and executes in ways that are unexpected and unintended. When you start looking at systems bigger than vacuum cleaners, critical systems, then you start looking at consequences that could become very dire.”


Russell expanded on this issue in an interview published in Quanta Magazine, noting that once machines reach a certain level of intelligence, they will be able to devise their own AI programs. In recent years, Russell observed, researchers have “…refined their arguments as to why [this] might be a problem. The most convincing argument has to do with value alignment: You build a system that’s extremely good at optimizing some utility function, but the utility function isn’t quite right. In [Oxford philosopher] Nick Bostrom’s book [Superintelligence], he has this example of paper clips. You say, ‘Make some paperclips.’ And it turns the entire planet into a vast junkyard of paperclips. You build a super-optimizer, [but] what utility function do you give it? Because it’s going to do it.”

Thus, a superintelligent AI programmed to make itself as smart as possible isn’t likely to disassemble human beings with clouds of nanobots because it views us as despicable competitors who must be expunged; malign intent isn’t part of its programming, and it’s unlikely to develop malignity, a trait unique to higher primates, on its own. Rather, it might disassemble us because it needs our molecules (along with the molecules of everything else on the planet, and ultimately the planet itself) to build a really, really big computer, thereby maximizing its intelligence and fulfilling its prime mission.

In other words, it’s nothing personal; it’s strictly business. Of course, the end result is the same, murderous design or not. We still end up disassembled.

So what’s the workaround? That could be challenging, says Russell, because it comes down to human values; we have them, the machines don’t, and something like “be reverent, loving, brave, and true” may be difficult to digitize. But one way or another, it’s imperative that we find ways to infuse human values into our AI overlords, uh, partners.

“I think we will have to build in these value functions,” Russell said in the Quanta interview. “If you want to have a domestic robot in your house, it has to share a pretty good cross-section of human values. Otherwise, it’s going to do pretty stupid things, like put the cat in the oven for dinner because there’s no food in the fridge and the kids are hungry. Real life is full of these tradeoffs. If the machine makes these tradeoffs in ways that reveal that it just doesn’t get it, that it’s just missing some chunk of what’s obvious to humans, then you’re not going to want that thing in your house.”