For most people, clouds are mere grist for metaphor, as with Joni Mitchell’s “Both Sides Now.” But clouds have deep implications beyond late 1960s pop music lyrics. Geophysical implications. Their frequency, type, direction, density and velocity all say a great deal about weather, climate—even atmospheric ozone depletion. The problem is that it’s hard to draw a bead on clouds, to obtain the precise measurements in real time that can translate into useful data. They are clouds, after all: nebulous, evanescent—indeed, vaporous.

It’s not that scientists haven’t had access to a basic technique for measuring clouds. For decades, they’ve relied on a process known as stereophotogrammetry, which employs two widely spaced cameras to produce 3-D images of landscapes (and clouds). These photos let researchers identify feature points on clouds, which in turn allow calculations of their development and movement over time. There are a couple of problems, though. First, standard stereophotogrammetry requires a reference point, such as a mountain or skyscraper-filled skyline; that means it’s difficult to employ over the open ocean, where clouds really like to hang out en masse.

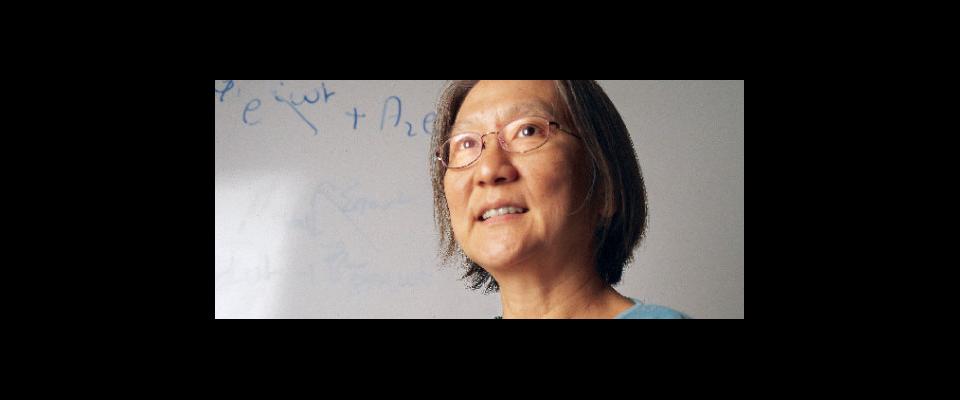

Second, the process is labor intensive, observes David Romps, a scientist with Lawrence Berkeley National Laboratory and an assistant professor in UC Berkeley’s Department of Earth and Planetary Science. To start with, the cameras must be properly calibrated. Actually, make that exquisitely, minutely calibrated.

“You have to set them up precisely,” Romps says. “You have to put them at the exact right distance, know exactly where they’re pointing, figure out the precise optics to use.”

Then comes the hard—or rather, harder—part. Once the cameras take shots of the same cloud from different angles, the feature points of the cloud must be evaluated by human eye and matched by human hand. It’s the parallax—the different angles in the point-of-view between each camera—that allows for a proper 3-D image, says Romps. But figuring out that parallax, matching the rows and flows and feather canyons from one photo to the next, is time-consuming and a strain for wet analogue computers like human brains.

“It can be maddening,” Romps allows.
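To see why parallax yields distance, it helps to look at the textbook case of two identical cameras pointing in parallel. The sketch below is only that idealized geometry, not the calibration or matching method from the paper; the function name and the sample numbers (baseline, focal length, pixel shift) are hypothetical values chosen for illustration.

```python
def depth_from_disparity(baseline_m, focal_px, disparity_px):
    """Distance to a feature point for an idealized, parallel two-camera rig.

    baseline_m   -- distance between the two cameras, in meters (assumed known)
    focal_px     -- focal length expressed in pixels (assumed identical cameras)
    disparity_px -- horizontal shift of the same feature between the two photos
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    # Similar triangles: a larger shift between photos means a closer feature.
    return baseline_m * focal_px / disparity_px


# Hypothetical numbers: cameras 500 m apart, 2000 px focal length,
# and a cloud feature that shifts 100 px between the two images.
distance_m = depth_from_disparity(500.0, 2000.0, 100.0)
print(distance_m)
```

The hard part described above is not this division but finding the pixel shift in the first place, which means deciding which blob in one photo is the same blob in the other.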

So Romps, fellow Lawrence Berkeley Lab researcher Rusen Oktem and colleagues from the University of Miami gave stereophotogrammetry a much-needed upgrade. As described in a paper published in the Journal of Atmospheric and Oceanic Technology, they devised techniques that allow for quick and accurate camera calibration. They also developed algorithms that automated cloud feature point analysis, obviating the need for matching images by hand.

The new processes have supercharged cloud research. Cloud formations can now be photographed easily in 3-D, and their development and movements tracked accurately over time. In one experiment conducted in Oklahoma for the U.S. Department of Energy, the researchers matched 35 million feature points on clouds over a three-month period.

“We could never have done that by hand,” Romps says. “These automated techniques are proving really robust, and they’re gaining wide acceptance.”

Moreover, they’re allowing researchers to get down to the real nitty-gritty of oceanic cloud dynamics, particularly the speed of such clouds as they rise through the atmosphere.

“In large part, this work grew out of a disagreement I had with a colleague on typical cloud updraft speeds,” Romps says. “I somehow assumed that was a settled thing, but it turned out there was very little data. That’s because it’s difficult information to get by radar. The best data we have is from people flying planes through the upper half of the troposphere over land, but nobody wants to do that over the ocean because it’s really dangerous. We had oceanic data up to eight kilometers (in altitude), but no real data from 8 to 16 kilometers.”

Supercomputer simulations disposed Romps to believe that oceanic clouds pick up a lot of speed as they boil through the upper troposphere. Thanks to the new stereophotogrammetry techniques, his position has been vindicated.

Which is all well and good, but what does it mean for stolid groundlings who don’t have our heads in the clouds? Plenty.

If clouds move fast enough, they can penetrate the stratosphere, saturating it with water vapor. That’s significant because water vapor is a greenhouse gas, so a really sodden atmosphere can contribute to a warmer planet. Further, cloud updraft speed influences the distribution of aerosols, the ubiquitous minute particles of dust and chemicals suspended in the atmosphere that can either reflect or absorb the sun’s thermal energy, depending on their composition. Clouds, in short, are big players in climate change.

Further, says Romps, “It looks like we’ll eventually have quadruple the amount of atmospheric CO2 that we now have, and there’s a great deal of uncertainty on how much the planet will warm with every CO2 doubling. The range is between 1.5 and 4.5 degrees Celsius (for each doubling). The largest contribution to that spread comes from our representation of clouds. Our basic goal with these cameras is to improve cloud representation in our climate models, and narrow that range of uncertainty.”
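
The arithmetic behind that range is worth spelling out. As a rough sketch, assume warming scales with the number of CO2 doublings, as the “for each doubling” phrasing implies. The symbols here are labels introduced for illustration: $\Delta T$ for the warming, $S$ for the warming per doubling, and $C/C_0$ for the ratio of future to present CO2.

$$
\Delta T = S \,\log_2\!\left(\frac{C}{C_0}\right), \qquad 1.5\,^{\circ}\mathrm{C} \le S \le 4.5\,^{\circ}\mathrm{C}
$$

A quadrupling is two doublings, so $\log_2 4 = 2$ and $\Delta T = 2S$. Under that assumption the quoted range works out to somewhere between 3 and 9 degrees Celsius, which is why narrowing the spread matters.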