Sometimes the public health field is a victim of its own success.

In the spring of 2001, several leading public health associations launched an ambitious effort to raise the profile of their field. The associations formed the Public Health Brand Identity Coalition–which I think we can all agree is not the sexiest name for an initiative to promote a sharper professional image–and the coalition commissioned a poll about attitudes toward the phrase “public health.” Almost 80 percent of Americans, according to the survey, did not think that public health had touched their lives in any way.

That’s an astonishing figure in an age of smoking bans, safe-sex ad campaigns, and fluoridated water—all classic public health interventions. But these are not the kind of things most people think of when they hear the term. “The average person, when you talk about ‘public health,’ they zero in on clinical services for the uninsured and the underserved, and they think about public clinics and public hospitals,” said Dr. George Benjamin, executive director of the American Public Health Association, one of the members of the branding coalition.

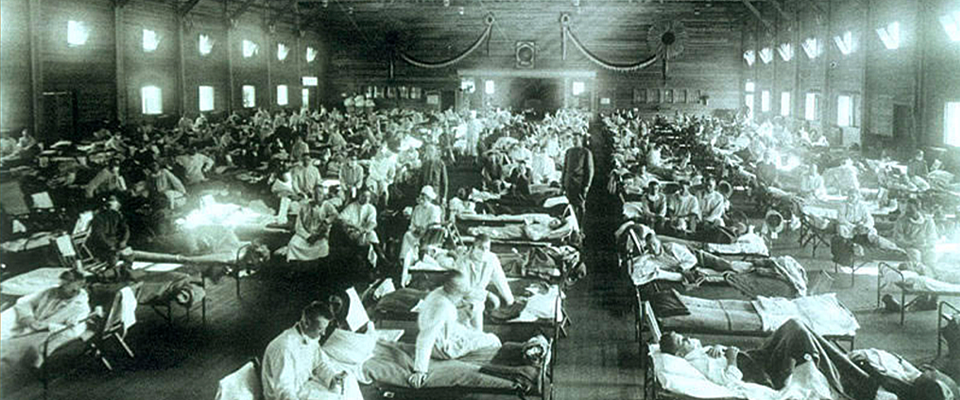

The campaign dissolved in the aftermath of 9/11, when Dr. Benjamin’s APHA and other coalition member groups found themselves deeply immersed in the national response to the attacks. Subsequent events–the anthrax letters, the emergence of new infectious diseases such as SARS and H1N1, clusters of deaths from contaminated food–have focused some attention on the importance of investing in a trained workforce to prepare for and respond to large-scale medical emergencies. Less understood, however, is how crucial public-health professionals and strategies are in sustaining the day-to-day well-being of both communities and individuals.

That’s a problem, because greater awareness of such basic and essential concepts as preventive care and shared or pooled risk could help ease what is likely to be the traumatic process of overhauling our health care system. Now that the United States has shed its pariah status among developed countries by actually declaring—legislatively—that people deserve access to insurance, we’ll be relying on public health workers and resources to play a central role in implementing the Obama vision of universal coverage.

Public health suffers from a built-in dilemma. When it works as it should, it’s invisible. Public health interventions seek to prevent bad things from happening in the first place; when such efforts are most successful, you don’t experience the catastrophe that’s been avoided and you don’t realize how awful it could have been. So the impact of public health is felt most clearly in the absence of negative consequences–which in turn reduces awareness of the vital functions it performs.

Vaccination is a great example: Because of the success of mass immunization campaigns in the second half of the 20th century, many potentially fatal infectious diseases, such as measles and whooping cough, have for decades seemed relics of the past. That has led some parents to believe that they can dispense with many childhood vaccines, which they fear–against all reasonable evidence–can cause autism. The outcome has been recent outbreaks of measles and whooping cough among unvaccinated kids, even though vaccines can prevent both diseases.

So whatever the results of the branding coalition’s 2001 poll, everyone’s life has been touched by public health advances, mostly in ways unseen.

People understand that the treatment of illness is the province of medicine or medical care. They know that when they are sick they should go to their doctor or nurse. Public health practitioners, on the other hand, view situations from a population-level perspective, seeking strategies that bolster overall societal health rather than focusing on individual cases of disease. Dr. Benjamin of the APHA said that when he needs to explain this basic distinction, he often cites his experience training as an ER doc. “I tell people that when someone would come into the emergency room with a rat bite, I took care of the rat bite,” he said. “If ten people came in with rat bites, the best public health intervention I could do would be taking out the rats–solving the problem versus providing clinical care.”

The straightforward concept behind Dr. Benjamin’s illustration is one of the major tenets of public health: It is better to deal with a health threat (rat infestation) “upstream” before it causes disease and illness (many rat bites) “downstream.” All first-year public health students can recount the story of the cholera epidemic that raced through London’s Soho district in 1854, killing more than 600 people. A local physician there, John Snow, rejected the prevailing view that cholera spread through “miasma,” or bad air, and speculated instead that water polluted with sewage was the source of the epidemic. By interviewing neighborhood residents and mapping the cholera cases, he eventually identified a particular public water pump on Broad Street as the suspected source. After authorities removed the pump handle, the epidemic ended, although it had already been subsiding on its own.

As a reporter at the San Francisco Chronicle in the early 1990s, I covered our last national battle over health reform. It was already clear–from the heartbreaking stories I heard of people who lost their insurance coverage after they or their kids fell ill–that we did not, in fact, have a functioning health care system. Today, from my vantage point at Berkeley as the coordinator for a new concurrent master’s program in both public health and journalism, I struggle to understand–and explain to students–how we as a society could have let things deteriorate so much since then.

Those who opposed the Democrats’ health care push appear to feel no embarrassment that the United States has recently ranked 30th out of 31 countries in child mortality rates, according to the Centers for Disease Control and Prevention–that’s 22 places behind Portugal, 3 places behind Cuba, and ahead of only Slovakia. Instead, these opponents keep braying and bragging that U.S. health care is the envy of the world. And for those with access to American medicine’s most advanced and high-tech interventions–like, say, members of Congress–the claim may hold true. But if you believe that all people deserve a chance to enjoy good health, then you must think about what’s available to the least as well as the most advantaged among us, and ask yourself: “How can we share resources more fairly so that everyone has access to basic preventive and primary care?”

As a nation, we suffer from an appealing delusion: that rugged individualism and self-reliance define the American character and represent the source of our country’s greatness. Now, I have nothing against rugged individualism and self-reliance—better to possess those traits than not, I suppose. But the national fetish for Horatio Alger heroics and “pull-yourself-up-by-your-bootstraps”-ism implies that everything is possible for those who work hard, and that those who cannot overcome obstacles have only themselves to blame. This is preposterous, of course; it obliterates the weight of historical injustice in shaping today’s discriminatory landscape. It also ignores what public health researchers call “the social determinants of health”–the factors that influence patterns of disease and wellness in the first place: the neighborhoods where we live, our socioeconomic status, the quality of our food and water supplies, and our access to education and information.

Nonetheless, for reasons of history, culture, and some weird national sociopathy, we have been willing to tolerate levels of health care inequality unique in the developed world. No health insurance? Sorry, not my problem! Your kid has cancer? Too bad, get a job! Or, as our last president–the compassionate conservative–famously declared, “[P]eople have access to health care in America. After all, you just go to an emergency room.” Anyone who has ever spent time in an urban ER knows how ridiculous that statement sounds.

And to make a possibly impertinent observation, emergency rooms are designed to handle actual emergencies, not the runny noses, allergies, sprained ankles, and other primary care needs of the tens of millions of Americans who aren’t president, don’t live in a beautiful mansion at taxpayer expense, and don’t have insurance at all, much less gold-plated coverage paid for by the federal government. However much right-wing pundits complain about the sausage-making messiness of the legislative process that produced the health care bill, the enactment of a reform package guaranteeing access to all represents a profound shift in national perspective.

The bill is far from perfect; for one thing, I’d prefer a single-payer system to the prospect of delivering tens of millions of new customers to an industry that’s helped create the swamp we’re in. But by promising to cover everyone (oh, except the undocumented, who harvest our crops, raise our kids, and construct our houses), the legislation embraces a comprehensive, public health–oriented approach. And the ban on charging more or denying coverage altogether because of a “preexisting condition” applies population-level strategies to the vexing problem of delivering medical care to individuals; bringing everyone into the insurance pool spreads the financial risk around so that no one is bulldozed by unaffordable medical costs.

It’s not at all surprising that the ferocious heart of the health-care debate has been outrage over the mandate that everyone purchase insurance. I understand why this upsets the Tea Partiers, given their distrust of the federal government and their belief that the bill will bankrupt the country. I get that they see forced participation as an infringement on the personal freedom they view as their birthright. So I’m thrilled about the money the country will save when these rugged and self-reliant patriots refuse to accept those government handouts known as Medicare and Social Security.

Beyond ensuring access to insurance, the new legislation incorporates an expansive view of health and well-being–or at least a view more expansive than we’re used to. It acknowledges the obvious: that maintaining healthy communities means more than providing medical care for individuals, however crucial that step.

As Dr. Stephen Shortell, dean of Berkeley’s School of Public Health, points out, the bill allocates about $10 billion for prevention and wellness over five years. It also supports education and training to bolster the ranks of the public health workforce, which in recent years has been severely stressed by increased demands and inadequate resources. “This is the first time in a long time that we should have additional financial support for our students,” he said.

The legislation mandates such health promotion measures as requiring chain restaurants to include calorie information on menus, and directing insurers to fully cover recommended screenings and vaccinations. These and other aspects of the overhaul represent significant improvements over current practices. The more we deploy such broad-based strategies to mitigate what are called “structural barriers” to health care access, treatment, and information–including barriers arising from poverty and discrimination–the more of a genuine public-health system we can claim to have.

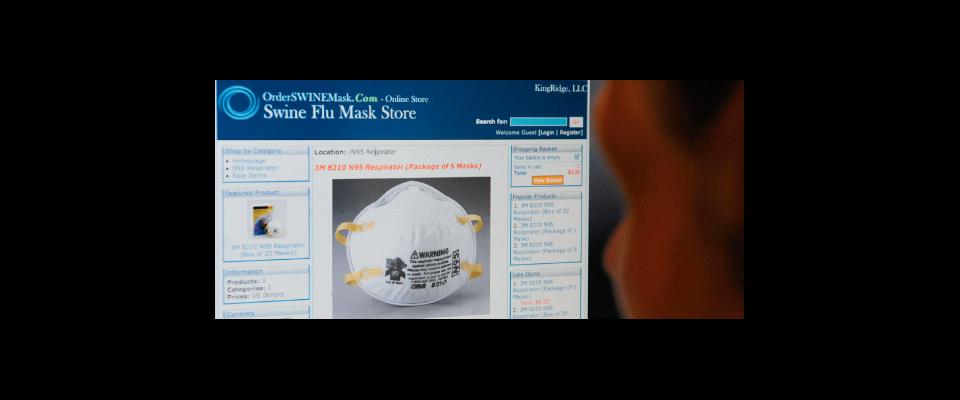

This fall, I’ll once again teach my regular journalism course on health reporting. On the first day of class I’ll ask the students what I always do: Can anyone explain the difference between public health and medicine? Despite all the recent developments and speeches and news articles about health care reform, I suspect that the students will stare at me blankly, as they usually do when I pose that question. One or two might venture a comment about public STD clinics or Medicare, or maybe they’ll ask if I’m referring to swine flu.

So hey, public health associations! Seems like now might be a good time to rev up that whole brand-identity-coalition thing again.