From the beginning, it was an ambitious idea. Computer automation would remove the taint of human emotion or prejudice from everyday life. Algorithms—the series of instructions that tell computers what to do—would make important decisions about everything from hiring to health care.

The reality, as Ziad Obermeyer discovered, is not quite that simple.

The associate professor of health policy and management in the UC Berkeley School of Public Health has spent much of his career studying algorithms. “Algorithms can do terrible things, or algorithms can do wonderful things. Which one of those things they do is basically up to us,” Obermeyer says. “When we train an algorithm, we make so many choices that feel technical and small. But these choices make the difference between an algorithm that’s good or bad, biased or unbiased.” In other words, algorithms are only as impartial as the humans who built them, and sometimes they replicate the very biases they were designed to overcome. Algorithmic bias, the systematic and repeatable errors that create unfair outcomes such as privileging one group over another, often exacerbates existing racial inequities in many areas, including health care.

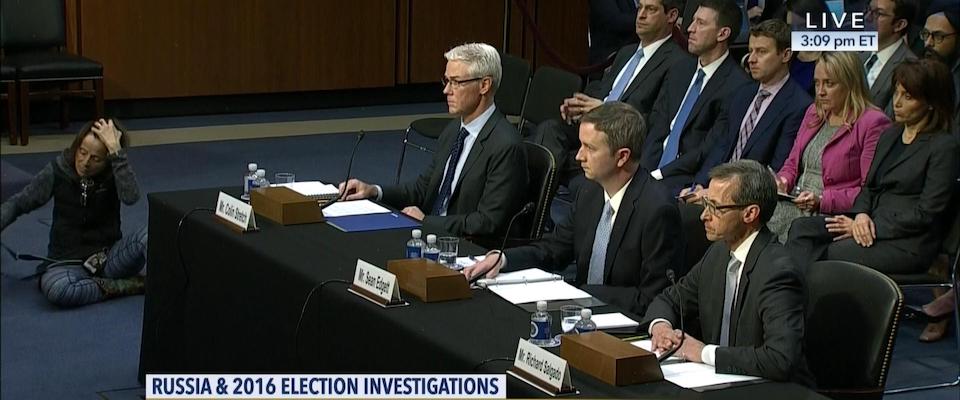

Last year, Obermeyer saw this dynamic play out in stark terms when he dug into an algorithm used by medical centers and hospitals around the country to ensure that high-need patients gain access to a care management program that allocates additional medical resources.

According to the algorithm, developed by a major health care group to score some 100 million Americans, only 18 percent of the patients identified as needing more care were Black; meanwhile, 82 percent of those “needing care” were white. A true reflection of need would have put the share of African Americans somewhere between 46 and 54 percent, Obermeyer and his team reported.

So how did the algorithm get the numbers so wrong?

Virtually all health systems have VIP care management programs that include things like a designated call-in phone number, priority for primary care appointments, and nurses who make house calls. These high-touch care programs make patients happier and healthier and reduce costs by preempting visits to the hospital or ER. But they’re also expensive, so medical providers are very selective about who gets in. That’s where the algorithms come in. Periodically, hospitals run everybody in their databases through the program and assign a “risk score” based on a number of factors including age and health conditions. Anyone with a score in the 97th percentile or higher is automatically accepted into the VIP program.
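The screening rule described above can be sketched in a few lines of Python. This is only an illustration, not the vendor's code: the function name `auto_enroll`, the patient IDs, and the scores are all hypothetical, and the cutoff is read here as a percentile rank among all scored patients, which is an assumption.

```python
def auto_enroll(scores, cutoff_percentile=97):
    """Return IDs of patients whose rank is at or above the cutoff percentile.

    scores: dict mapping a (hypothetical) patient ID to a risk score,
    where a higher score means higher predicted risk.
    """
    ranked = sorted(scores, key=scores.get)  # lowest score first
    n = len(ranked)
    enrolled = []
    for position, patient_id in enumerate(ranked):
        # Percentile by position in the sorted list (ties keep input order).
        percentile = 100 * (position + 1) / n
        if percentile >= cutoff_percentile:
            enrolled.append(patient_id)
    return enrolled


# Hypothetical data: 100 patients with scores 1 through 100.
scores = {f"patient_{i}": i for i in range(1, 101)}
enrolled = auto_enroll(scores)
print(enrolled)
```

Note that nothing in this rule looks at race. Whether the outcome is fair depends entirely on what the score actually measures.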

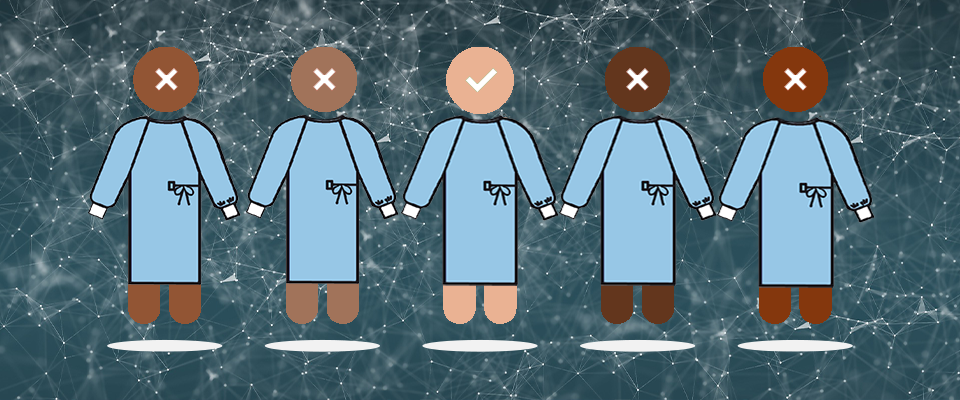

“One assumes that two people with the same score are treated the same way regardless of color, right?” says Obermeyer. “But at any given score, if you compare a Black person and a white person, on average, the Black person was considerably sicker and had a higher need. But the [algorithmic] bias allowed healthier white patients to essentially cut in line ahead of sicker Black patients.”
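The comparison Obermeyer describes, holding the score fixed and comparing health across groups, can be sketched as below. The records are invented for illustration, and counting chronic conditions as the measure of illness is an assumption of this sketch, not a description of the study's exact method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (risk_score, group, number_of_chronic_conditions)
records = [
    (90, "Black", 5), (90, "white", 4),
    (90, "Black", 6), (90, "white", 4),
    (50, "Black", 3), (50, "white", 2),
    (50, "Black", 3), (50, "white", 1),
]


def mean_conditions_by_score_and_group(records):
    """Average the illness measure within each (score, group) bucket."""
    buckets = defaultdict(list)
    for score, group, conditions in records:
        buckets[(score, group)].append(conditions)
    return {key: mean(values) for key, values in buckets.items()}


result = mean_conditions_by_score_and_group(records)
for (score, group), avg in sorted(result.items()):
    print(score, group, avg)
```

If the score treated both groups alike, the averages within each score level would be roughly equal. A consistent gap at the same score is the signature of the bias described here.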

When and where did bias infiltrate the equation? At the very beginning, says Obermeyer, when the designers made sickness synonymous with money spent.

Rather than using the underlying physiology to determine who was at high risk of worsening health, programmers relied on data from insurance claims to calculate a patient’s health care spending. The more money patients spend on health care, the sicker they are, right? Not quite. There’s an inherent flaw in this logic: White people have more disposable income, more and better insurance, and greater access to medical providers. As a result, they spend more on health care than Black people with identical health issues.
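A toy example shows how the choice of target changes who gets selected. Everything here is hypothetical: the patients, the dollar amounts, and the assumption that Black patients in this made-up data spend 30 percent less than white patients with the same number of chronic conditions.

```python
from collections import namedtuple

Patient = namedtuple("Patient", "pid group chronic_conditions annual_spending")

# Hypothetical patients; spending is lower for Black patients at equal illness.
patients = [
    Patient("a", "white", 5, 10000),
    Patient("b", "Black", 5, 7000),
    Patient("c", "white", 4, 8000),
    Patient("d", "Black", 4, 5600),
    Patient("e", "white", 3, 6000),
    Patient("f", "Black", 3, 4200),
]


def top_k(patients, field, k):
    """Rank patients by the given field, highest first, and return k IDs."""
    ranked = sorted(patients, key=lambda p: getattr(p, field), reverse=True)
    return [p.pid for p in ranked[:k]]


selected_by_cost = top_k(patients, "annual_spending", 2)
selected_by_need = top_k(patients, "chronic_conditions", 2)
print("ranked by spending:", selected_by_cost)
print("ranked by conditions:", selected_by_need)
```

Ranked by spending, the two slots go to white patients `a` and `c`, and patient `b`, who is sicker than `c`, is passed over. Ranked by chronic conditions, `a` and `b` are selected instead.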

“Because of the structural inequalities in our health care system, Blacks at a given level of health end up generating lower costs than whites,” Obermeyer says. “As a result, Black patients were much sicker at a given level of the algorithm’s predicted risk.”

Obermeyer’s study, published in Science in October 2019, highlights the importance of carefully assessing and eliminating biases in artificial intelligence models. Fortunately, in this case there was an easy fix for the problem, and when he and his colleagues at the University of Chicago’s Booth School of Business alerted the software manufacturer to their findings, the company was eager to correct the mistake.

It turns out that the algorithm had failed to account for nearly 50,000 chronic conditions experienced by Black patients. When Obermeyer rejiggered it to use patients’ biological data rather than health care spending, that number dropped to fewer than 8,000; the update reduced bias by 84 percent and nearly tripled the number of Black people in the automatic enrollee group.
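As a rough check, the rounded figures quoted above (nearly 50,000 before the change, fewer than 8,000 after) are consistent with the reported reduction:

$$
\frac{50{,}000 - 8{,}000}{50{,}000} = \frac{42{,}000}{50{,}000} = 0.84 = 84\%
$$

The article gives only approximate counts, so this is an approximation rather than the study's exact calculation.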

“The original algorithm was a tool for preserving the status quo,” says Obermeyer. “If you were spending a lot of money last year, then you were going to be first in line for this program that spends even more money on you the next year. By targeting the algorithm to find people who really need help, you’ve turned it from a tool that reinforces inequality to one that fights it.”

Obermeyer knows his research only exposes the leading edge of a widespread problem. He believes that the formulas being used to allocate health care resources during the COVID-19 pandemic may also be biased against people of color. Because these communities have less access to testing, he says, the infection rates are probably much higher than the numbers suggest, putting these populations in greater need of care and resources.

Because marginalized groups also have higher rates of comorbidities for COVID-19 like diabetes or cardiovascular disease, he is concerned that efforts and algorithms that direct limited health care resources to healthier COVID patients will only exacerbate existing racial disparities.

Still, Obermeyer sees many reasons to be optimistic about the role algorithms can play to make our health system more equitable and just, with careful oversight from the people who build them.

“We tend to blame algorithms for a lot of problems, but the algorithm is just basically holding up a mirror to us,” he says. “It’s really hard to get humans to stop being biased, but with algorithms, you just rewrite the software, flip the switch, and overnight they become far less biased.”