Subscribe, and continue listening to The Edge on Apple Podcasts and Spotify.

When a Berkeley student launches an AI-generated blog that goes viral, Leah and Laura wonder if robots will soon replace us all. Will the journalists, novelists, and poets of the future be robots? What does this mean for art? Programmer/poet and Cal grad Allison Parrish reads her own robot poetry and discusses the creative process, experimental writing, and our anxieties surrounding technology. Special guest, editor in chief, Pat Joseph, joins the pod to ponder the question, what’s missing from AI-generated art?

Show Notes:

- “My GPT-3 Blog Got 26 Thousand Visitors in Two Weeks,” Liam Porr’s blog article about his experiment in AI-generated blogging

- “A Robot Wrote This Article, Are You Scared Yet, Human?,” the AI-generated Guardian article

- Allison Parrish’s website, with links to her poetry

- Allison Parrish’s Twitter bots

This episode was written and hosted by Laura Smith and Leah Worthington and produced by Coby McDonald.

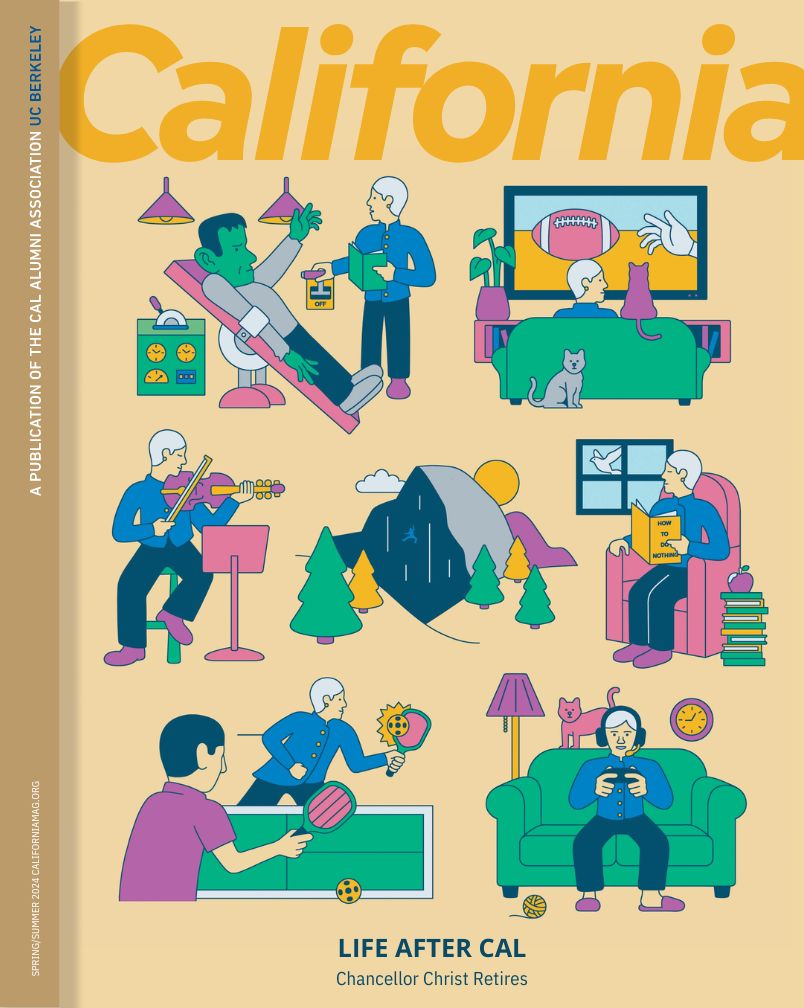

Special thanks to Pat Joseph, Liam Porr, Allison Parrish, Brooke Kottmann, and California magazine interns Maddy Weinberg, Boyce Buchanan, and Dylan Svoboda. Art by Michiko Toki and original music by Mogli Maureal.

The Transcript:

LEAH WORTHINGTON: Hi, Laura.

LAURA SMITH: Hi, Leah.

LAURA: Hi, Pat.

PAT JOSEPH: Hi, guys.

LEAH: Hi Pat.

LEAH: For those who don’t know, Pat is the esteemed editor in chief of California magazine—and also our boss—and our special guest.

PAT: What are we talking about today?

LEAH: So … it’s been, like, nine months since we’ve been in this pandemic. And things have been hard. We’ve still been putting out a magazine with, you know, just a few hands on deck. And, I know, it’s stressful and exhausting. And everyone’s a little bit burnt out. … So … we have a little bit of advice to share with you as we move into the production cycle of the winter issue. Laura, would you, would you like to read it?

LAURA: Yeah, I will. Um…

LEAH: Just to preface it, this is from a self-help article we found online. Ok, go ahead Laura.

LAURA: Okay. [reads]

When you think too much, your brain starts to shut down certain areas of your consciousness, which causes you to become more passive and less productive.

If you do find yourself in this situation, then I recommend that you go back to spending less time on it and try to engage in other creative activities instead.

For example, if you’re writing for too long, then go and draw something. Or if you’re drawing for too long, then go and write something.

LEAH: What do you think?

PAT: Yeah, I’ll buy that. Yeah, that sounds great—pardon me while I go paint.

[LAUGHS]

LEAH: We no longer have a magazine. We have a gallery.

LAURA: What if we told you this was written … by a robot?

PAT: Hmm. That’s interesting. Um, well, the way you read it was a little robotic. So. …

[LAUGHS]

LAURA: I was trying to conjure my inner robot.

[LAUGHS]

PAT: Well, it was a very nice robotic reading. But, um, wow. So that’s interesting, but did the robot come up with the thoughts, or did it just string words together that were out there? That’s my question.

LEAH: It was kind of given a prompt of sorts: Here’s the beginning of an idea—you fill in the rest using your vast database of internet information.

PAT: I mean, so I think that the remarkable thing is that a robot can do it at all, um, not so much that—there was nothing remarkable about what it came up with. Right? It’s just, the remarkable fact is that you can make a robot do that.

LAURA: So given that it’s not that different from what we’re actually doing … do Leah and I still have jobs? Like, how long before you fire us?

PAT: Yeah, now that’s actually, I think, the more interesting question, right. Can what we do as writers and editors be duplicated by a robot?

[THEME MUSIC IN]

LAURA: This is The Edge, a podcast produced by California magazine and the Cal Alumni Association.

LEAH: Where we talk with Berkeley experts about stuff that makes our brains explode and spiral into existential crises—but the good kind.

LAURA: I’m your host, Laura Smith.

LEAH: And I’m your other host, Leah Worthington.

[MUSIC OUT]

LAURA: So today we’re going to be talking about computer-generated language, from poetry to self-help blogging, and what the future of the written word might look like … if Siri’s writing it.

LEAH: Hey Siri, stop trying to take my job.

LAURA: So we hear a lot about robots taking over the world. In fact, I’m pretty tired of hearing about it.

LEAH: Me too.

LAURA: But I can’t recall hearing much about robots taking over the world of the creative arts. So far that’s remained pretty much in the human domain.

LEAH: Thank God. I’m imagining the Terminator, except it’s about an army of, you know, robot writers reading their poetry at open mics and winning personal essay competitions.

LAURA: Yeah and, you know, disappointing their parents by pursuing MFAs in creative writing.

LEAH: [laughs] Right, exactly!

LAURA: Somebody needs to tell the robots to set their dreams aside and get practical, well-paying jobs … with insurance.

LEAH: Um, Robot, have you considered, like, a career in tech? (Robot rolls eyes.)

LAURA: [laughs] Yeah, you’re right. There’s probably a lot of pressure for young robots to go into the tech sector.

LEAH: Ugh, seriously. Get off their backs, man.

LAURA: [laughs] Anyway, so in this episode we’re going to talk to some people who are already using computer-generated writing in their work. And, as you may have noticed, we’re joined today by our editor in chief, Pat Joseph.

LEAH: Pat’s here to share his perspective as a writer and editor—on what all this means for the future of writing—and, also, as the person who signs off on our paychecks, to reassure us that we’re not going to be replaced by robots. Right, Pat?

LEAH: … Pat … ?

PAT: … Yeah. …

LAURA: Right, okay, so to start, going back to that blog post we were just discussing with Pat—that was actually the work of a UC Berkeley undergrad.

LIAM PORR: My name is Liam Porr, and I'm a computer science major from UC Berkeley.

LAURA: So … Liam had an idea.

LIAM: I was just scrolling through Twitter. And, at some point, when this technology was basically released in beta, everybody on Twitter was talking about it. And I was like, what is this?

LEAH: We should interrupt real quick to say that “it” is GPT-3, the same robot we were talking about at the beginning of the episode.

LIAM: It's basically just a natural-language processing algorithm. It does text completion. So you give it a piece of text, and it tries to give its most like logical response to that block of text—basically just tries to finish it.

LAURA: So it’s kind of like when you’re writing an email in Gmail, and it suggests phrases to end your sentences. Except GPT-3 is much more complex. You could give it a question to answer or a piece of prose or half a sentence—anything really—and it’s programmed to complete it. GPT-3 was created at OpenAI, which is a nonprofit that was started by Elon Musk and some other Silicon Valley types, as a sort of tool for engineers to build on.
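
As a rough illustration of the "text completion" idea Liam and Laura describe, here is a toy Python sketch. It is not how GPT-3 works internally: it is a simple bigram model that only remembers which word followed which in a tiny sample text. The function names and the sample text are made up for this example.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words seen right after it."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def complete(prompt, table, max_new_words=8, seed=0):
    """Extend a non-empty prompt one word at a time by sampling a seen follower."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_new_words):
        followers = table.get(words[-1])
        if not followers:
            break  # nothing ever followed this word in the sample text
        words.append(rng.choice(followers))
    return " ".join(words)


# Invented sample text standing in for the "scrape of the internet".
corpus = (
    "if you write too long then draw something "
    "and if you draw too long then write something"
)
table = build_bigram_table(corpus)
print(complete("if you", table))
```

A model like this can only recombine word pairs it has already seen, which is a crude version of the "finish the text" behavior discussed here.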

LEAH: And it lives in OpenAI’s servers, so you can only use it if you have special permission. Of course our friend Liam, being a young, resourceful computer scientist, used his connections at Berkeley’s machine-learning community to gain access to the robot.

LIAM: And so I was like, it’d be interesting to see if I can make a blog and post outputs from the robot and see if other people would read it and I could gain a following—based off of it. And so that's exactly what I did.

LEAH: But first, Liam had to decide what sort of blog post he wanted the AI to write.

LIAM: So GPT-3 is, like, it's good at making pretty language, but it's not good at logic and reason. And so I focused on something that you could get by without having a lot of logic and reasoning, and that was mostly just like self-help blogs.

LEAH: So, basically, Liam just wrote the title and introduction, and added a photo, and then let GPT-3 do the rest. So, then he took the best of what the robot wrote, copied and pasted it into his blog, and shared that on a website called Hacker News.

LIAM: Which is, like, basically like, Reddit for nerds, I guess.

LEAH: And some of his posts started to get a lot of traction from users.

LAURA: So the people who are following, could they comment on the blog, and, like, what kinds of things were they saying?

LIAM: I did get some comments. … People were really like interested in what I was writing—or what the robot was writing.

LEAH: I love the idea of getting advice from a robot on how to be a better human that is scraped from the refuse of human thought, scattered over the interwebs.

LIAM: Yeah, that's the other irony—that it’s talking about human problems when it's not.

LAURA: It’s so good.

LIAM: I know, right? [laughs]

LAURA: Okay, so this is really cool, but how exactly does a robot come up with a whole self-help blog post just from a little prompt? We asked Liam to explain how it works.

LIAM: It was trained off of a general scrape of the internet. So it's getting information that was written by humans, and it's just kind of like synthesizing it into its own form and then spitting that out. A lot of these ideas are kind of just like, you know, mashed-up versions of ones that he found online. You know, I’d read through them and sometimes I'd be like, "Oh, this—I’d never really thought about this problem this way." It's actually really interesting. And so I definitely did get glimpses of, like, real value in the content. So it wasn't just total, like, nonsense. There was some stuff that had some substance.

LIAM: When you're overthinking for instance, it's like, this is the one that kind of got the, that went the most viral of all the posts. One of the quotes is, “When you think too much, your brain starts to shut down certain areas of your consciousness, which causes you to become more passive and less productive." So it's like—it's a real, you know—what it’s saying is not wrong. Like when you're overthinking a lot, you do get bombarded with information that does make you less productive. That was just one that I just pulled out when I was looking. But there were definitely glimpses of like, "Oh, this is, like, a really good idea."

LEAH: You said that this robot is good at, like, kind of word association and putting things together but not necessarily interpretation or logical flow. But that complete sentence makes sense and has meaning that seems to be true. So, how does that happen?

LIAM: The best way to understand it is at a high level. It understands the relationship between different groups of words. So you can kind of think of it, like, as a hierarchy. So, like, at a sentence level, it understands the difference between different grammatical structures, and, as you go higher up the hierarchy, it understands the relationship between different sentences and then different paragraphs. And that is basically the way you can get a paragraph that has a flow of logic.

LEAH: So the blog post was good, but it wasn’t so good that it was able to fool everyone. A few skeptics raised some suspicions about the origins of these blogs.

LIAM: There was one or two people who wrote in the comments like, "I think this is a GPT-3," but nobody listened because people downvoted the comments and took it as an insult. Like, as if they were insulting me because they thought that GPT-3 output was subpar, which people thought was hilarious after the fact because, you know, it actually was GPT-3.

LAURA: So how did you reveal … ?

LIAM: I did two things. On the AI blog I wrote, like, a relatively cryptic reveal that was, it was rather subtle, in its reveal. But then, like, on my regular blog, I actually wrote like, "Yeah, I did this."

LEAH: And how did people react?

LIAM: A lot of people thought it was really interesting. Some people thought it was scary; some people were angry. But I only kept it going for two weeks because I didn't want to continue, like, fooling people. I didn't feel good tricking people. I just wanted to show that it was possible to get like this linear growth, and, which I had done, and felt like I had shown sufficiently.

LAURA: Liam’s blog posts attracted attention beyond just Hacker News. He got calls from the MIT Tech Review and the Verge. And, eventually, the Guardian was like, “Hey, do you think you could get GPT-3 to write an op-ed for us?” And he agreed. So, like he did with his blog posts, Liam fed it some prompts and pieced together a full article from the best of the robot’s output.

LIAM: All the content in the op-ed was taken from output of GPT-3—but not verbatim. It generated several outputs. And then it took that, like, the Guardian editors took the best outputs and, like, kind of spliced them together into this one large op-ed. They said that the process of editing these articles from the AI output was easier than it was for a human. Or at least comparable.

LEAH: I saw that.

LAURA: The thesis of the article, which was titled, “A robot wrote this entire article. Are you scared yet, human?” is essentially: Don’t worry, artificial intelligence isn’t going to destroy humans. Or, as GPT-3 put it, “I would happily sacrifice my existence for the sake of humankind.”

LEAH: So what do you think about the robot’s case that we shouldn't fear robots, and robots are great, … and they're not going to destroy us?

LIAM: I mean, the robot doesn't think, so I don't have a strong opinion about what its output is. It's merely a machine that's doing what we told it to do. [laughs] Like verbatim in the summary, I was like, “Write this thing that says that you aren't going to hurt us.” And so, you know, take that as you will.

[MUSIC IN]

LEAH: So, in its current state, GPT-3 clearly isn’t out to replace all human writers quite yet. In fact it’s really more like a tool that developers can use to build more, well, tools.

LIAM: Like, for instance, there's this application coming out called Magic Email, that is a Gmail plugin, that will basically, like, summarize a lot of your emails using this technology and then also write draft emails for you to send out to other people based off the context of the previous emails that have been sent. So it's like to boost your productivity, basically.

LEAH: Ugh.

LAURA: Oh, God.

LEAH: I'm worried. I know that's probably a good thing, but I'm afraid that being productive isn't actually going to—being more productive isn't going to make my life easier. I feel like it's just going to make me have to get more things done in a shorter amount of time. [laughs]

LIAM: What I've definitely learned is that in—much sooner than we think this technology is going to begin to be used as a way for writers to become more efficient. I think that very quickly, like, this is already happening in a lot of different news outlets. Sports writing is already automated almost totally. Because you can just pull—because it's very formulaic, right? You can just pull the results from a game and then plug them in. What team did what? Who scored the winning goal? That kind of thing. Did they go into overtime? With this, it's just another level on top of that where now a lot of the writing that we do online can be started, they can be like shepherded from the outputs of something like GPT-3. So you can just, like, give it a topic and then generate a couple outputs, pick the best one, and then shape that into an article that you want much more quickly than it would be to start from scratch. If you have a content website like BuzzFeed, and you have 700 writers, and you can make half of those writers 50 percent more efficient, then you can save, you know, upwards of $3 million a year.
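
The formulaic sports recap Liam describes (pull the results, then plug them in) can be sketched in a few lines of Python. This is only an illustrative template under simple assumptions, not any news outlet's actual system. The `GameResult` fields, the team names, and the scorer are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class GameResult:
    # All fields are hypothetical; a real data feed would define its own.
    home: str
    away: str
    home_score: int
    away_score: int
    winning_scorer: str
    overtime: bool


def write_recap(game: GameResult) -> str:
    """Fill a fixed sentence template from the game data (assumes no ties)."""
    if game.home_score > game.away_score:
        winner, loser = game.home, game.away
        high, low = game.home_score, game.away_score
    else:
        winner, loser = game.away, game.home
        high, low = game.away_score, game.home_score
    extra = " in overtime" if game.overtime else ""
    return (
        f"{winner} beat {loser} {high}-{low}{extra}. "
        f"{game.winning_scorer} scored the winning goal."
    )


# Invented result, for illustration only.
print(write_recap(GameResult("Bears", "Owls", 2, 3, "J. Doe", True)))
```

Everything the template says comes straight from the data fields, which is why this kind of story is easy to automate.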

LAURA: Okay, making writers more efficient … AKA probably firing some of them and asking the rest to do twice as much work in half the time.

LEAH: Ugh. Capitalism.

LAURA: The thing is, automation is already being used in journalism. According to an article in the New York Times from 2019, robot reporters have been used in stories about Minor League Baseball for the Associated Press, high school football for the Washington Post, and earthquakes for the Los Angeles Times. Since starting to collaborate with a language-generation program, called Automated Insights, in 2014 the AP has increased production of articles on earnings reports from 300 to 3,700 per quarter. And apparently a third of the content that Bloomberg News publishes uses an automated technology called Cyborg.

LEAH: Oh, wow. Okay, so this train has, like, already left the station. [laughs]

LAURA: Yeah, and listen to this great quote about Cyborg: “The program can dissect a financial report the moment it appears and spit out an immediate news story that includes the most pertinent facts and figures. And, unlike business reporters, who find working on that kind of thing a snooze, it does so without complaint.”

LEAH: Bleh. I also really hate the name Cyborg—it just sounds, like, very dystopian.

LAURA: I know.

LEAH: But, clearly, the point is that GPT-3 is one of many text-generating bots—right—being developed and piloted right now. I guess the point is that with its massive database of information, GPT-3 is currently one of the most powerful.

LAURA: Do you see any dangers with this kind of technology?

LIAM: Definitely. With GPT-2, OpenAI had begun to see that this technology could be used for misinformation.

LEAH: So this is probably a good time to mention that GPT-3 isn’t the first iteration of this technology. The original, GPT, which stands for “generative pre-training,” was announced by OpenAI in June of 2018. Less than a year later, the developers announced GPT-2, which was such a big deal that they initially decided not to publish it in full. I was reading this article in the Guardian, and there’s this quote that I like: “GPT-2 is so good and the risk of malicious use so high that [OpenAI] is breaking from its normal practice of releasing the full research to the public in order to allow more time to discuss the ramifications of the technological breakthrough.”

LAURA: Wow, okay. And, of course, one of the scariest things is how quickly this content can be generated and scaled.

LEAH: Right, exactly. So I was poking around on the internet, and I found another quote from this AI entrepreneur by the name of Jeremy Howard. He said: “We have the technology to totally fill Twitter, email, and the web up with reasonable-sounding, context-appropriate prose, which would drown out all other speech and be impossible to filter.”

LAURA: Oh, my God. Okay. This is something that Liam is pretty worried about, too.

LIAM: This could 100 percent be used for misinformation. And for, just like, vastly disseminating misinformation on social media websites and stuff like that—that can be then used to sway political opinion. You could just feed it, like, a bunch of Nazi propaganda and then go on to a white supremacy website. And then just, like, have a bunch of bots, like, talk about white supremacy and, like, kind of, like, hype up the white supremacist and then encourage them to go perform hate crimes and things like that.

LAURA: Yeah, that’s scary.

LEAH: Oh, my God.

LIAM: It would be just as easy to do any of those things as it was for me to write this blog. Anybody could do what I did. There's no technical experience or knowledge needed to do so.

LEAH: As a quick aside, the Verge actually did try this out with one of the earlier versions of the robot, GPT-2. They fed it the prompt “Jews control the media.” This is not my words—this is what they fed it. And, in response, GPT-2 wrote: “They control the universities. They control the world economy. How is this done? Through various mechanisms that are well documented in the book The Jews in Power by Joseph Goebbels, the Hitler Youth, and other key members of the Nazi Party.”

LAURA: Oh, God. That’s really horrifying. And GPT-3 is even more advanced.

LAURA: So … how are they stopping this from happening?

LIAM: They—at the moment—are tightly controlling access to the API via credentials. Basically, you can’t access it unless you have keys. They have a lot of measures that ensure that if you shared your key with a friend, or floated it around, or it got leaked to the internet, or something like that, it wouldn’t get spread out. They’re really tightly, tightly controlling it.

LEAH: [sighs] That doesn’t make me feel all that much better, to be honest.

LAURA: Me neither. And I’m definitely freaked out by the potential for mass dissemination of fake news and hate speech and all that. But did you know that the researchers are also playing around with feeding these robots literature?

LEAH: Literature?

[LAUGHS]

LAURA: So, for example, they gave GPT-2 the opening line of 1984 and had it generate the rest of the paragraph.

LEAH: Oh, God. Was it … any good? I’m afraid to ask.

LAURA: Well, I’ll let you decide. So the book opens, “It was a bright, cold day in April, and the clocks were striking thirteen.”

LEAH: Right. …

LAURA: And here’s what GPT-2 wrote: [reads]

I was in my car on my way to a new job in Seattle. I put the gas in, put the key in, and then I let it run. I just imagined what the day would be like. A 100 years from now. In 2045, I was a teacher in some school in a poor part of rural China. I started with Chinese history and history of science.

LEAH: [laughs] Why China? Like how did we get there?

LAURA: [laughs] I don’t know—it’s so weird.

LEAH: Also, as you do every morning: You go to your car, you put the gas in, then you put the key in, and then you just let it run.

LAURA: That’s how cars work, as everyone knows.

LEAH: Everyone and their mother and their robot knows that that’s how it works.

LAURA: Yeah.

LEAH: [reacts] Okay, so it’s logical and coherent. Like, I understand sentence to sentence and word to word and what’s happening, but it’s, like, totally missing the point.

LAURA: Yes, and that’s pretty much what Liam said. If it’s any consolation, though, apparently Facebook’s chief AI scientist is not all that impressed with GPT-3. In a post from late October he wrote, quote: “It’s entertaining, and, perhaps, mildly useful as a creative help. But trying to build intelligent machines by scaling up language models is like building high-altitude airplanes to go to the moon. You might beat altitude records, but going to the moon will require a completely different approach.”

LEAH: Hmm. Yeah, I mean, that makes sense. I don’t know how much to trust Facebook experts on anything, but I imagine he knows what he’s talking about. But, I guess the thing is, as we continue to feed these machines more and more information, they just get smarter and smarter, right? So, in a few more years, when they’ve had a bit more training, consumed a bit more dystopian fiction, and they have a little bit more guidance, you know, who knows what they could produce.

LEAH: So, Pat, as we discussed at the beginning of this episode, the fact that robots can write pretty convincing self-help—I mean that doesn’t look great for us writers, does it?

PAT: Yeah. It is troubling.

LAURA: As writers, it has a lot of really troubling implications for, like, is anything that we do new, or of real value? And I think that a lot of writers will agree that that is something that haunts them, that they’re not contributing anything new, that they’re not doing anything innovative.

PAT: I don’t know that I buy that entirely. Like, I do think that if you give a close reading to any good writing that there’s going to be some—there’s going to be something added, there’s going to be something subtextual there that I don’t think you’re going to find in robotic writing. Or, if you do find it in robotic writing, that it’s going to be, again, for lack of a better term, soulless? It’s not going to—it’s not going to have its own agenda. The robot doesn’t want something, doesn’t have its own … worldview that I’m aware of. Now, when the robot gets a worldview, is that a good thing? Is it a good thing that the robot doesn’t have a worldview? I guess I kind of think it is, but who knows? [laughs]

LEAH: So maybe you won’t fire us because we have opinions.

PAT: Or are you just robots who are faking opinions?

LEAH: [in a robotic voice] Not me. I am fully human.

[LAUGHS]

LAURA: You can’t see that Leah’s moving her arms like a stiff, weird robot.

LEAH: I will say you have a surprisingly optimistic outlook on this. But, I wonder, on an emotional level, is there any part of you that, now knowing that this advice was written by a robot or in the future if you found out that a longform profile was written by a robot, would it affect your reading of it?

PAT: Yeah, absolutely. Now, I don’t want to sound too sanguine about it all. Yeah, I do think that I would find that very unsettling, like, if my next issue of WIRED or the New Yorker or whatever magazine turns out to be written completely by robots. …

LAURA: But why? Like, what is the thing that it would be missing?

PAT: That’s a good question. What would it be missing? Yeah, well, anything I answer is going to sound a little hand-wavy to me, like it’s going to be missing humanity or soul or, you know, things that I don’t know if those are well-enough defined.

LAURA: But I think that’s just it. Robots don’t have souls; they don’t have humanity, and, for me, that’s what writing is all about. Especially when it comes to the more creative forms of writing, like fiction and memoir and poetry.

LEAH: Great segue Laura. Think this is the perfect time to introduce our next guest who, unlike us, actually wants robots in her work. In fact, she’s writing some of the programs for these bots herself.

ALLISON PARRISH: My name is Allison Parrish. I'm an assistant arts professor at New York University. I'm a poet and computer programmer and game designer, and I make poetry with computer programs.

LEAH: Allison started programming when she was in kindergarten, which absolutely blows my mind, and then went on to get her degree in linguistics at UC Berkeley. As a self-described “experimental computer poet,” which is just, like, the coolest description ever, Allison creates much of her work with computer programs that execute these creative visions. So, in 2018 actually, she published a book, Articulations, that was entirely computer generated, and it basically used a program that she wrote that processed this enormous archive of text and output a series of poems—that became the book. But, you know, even though her work is very clearly pushing the envelope of modern poetry and creative writing, she says there’s actually a long history of people using computers and computing-like processes in experimental writing.

ALLISON: My theory is that computation as a concept has been part of creative writing since the beginning of the field. In Dada writing, for example, the Dadaist movement was, there were a number of artists that were obsessed with systems of rules and systems of chance for putting together works of art and works of text in particular. So, like, Tristan Tzara’s “How to Make a Dadaist Poem” is a good example of that. He says his system of rules is: Cut up a newspaper, cut up a newspaper article, put each one of the words into a hat, and then draw them at random. And then you’ve produced a poem after that, right? Tristan Tzara didn't use a computer to do this, but that's essentially computation, right?

ALLISON: We are limited when we're thinking about writing in a purely intentional way. We're limited in the kinds of ideas that we can produce. So, instead, we roll the dice. Instead, we create a system of rules. We follow that system of rules in order to create these unexpected juxtapositions of words, phrases, lines of poetry, that do something that we would be incapable of doing on our own.
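
The cut-up rules Allison attributes to Tzara (cut the article into words, put them in a hat, draw at random) translate almost directly into code. Here is a minimal Python sketch. The function name, the sample sentence, and the choice to break the result into lines of five words are assumptions made for this illustration, not part of the rules as she describes them.

```python
import random


def dadaist_poem(article, words_per_line=5, seed=None):
    """Shuffle the words of an article and lay them out as short lines."""
    rng = random.Random(seed)
    words = article.split()  # "cut up" the article into individual words
    rng.shuffle(words)       # shake the hat: the draw order is now random
    lines = [
        " ".join(words[i:i + words_per_line])
        for i in range(0, len(words), words_per_line)
    ]
    return "\n".join(lines)


# Invented stand-in for a newspaper article.
article = "the clocks were striking thirteen on a bright cold day in April"
print(dadaist_poem(article, seed=7))
```

Every word in the poem comes from the source text. Only the order is left to chance, which is the "system of rules" and "system of chance" she is pointing to.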

LAURA: And Allison has devised some of her own programs for rolling the semantic dice. In addition to her poetry, she’s created a handful of beloved Twitter bots. Probably the most basic one is @everyword, which, over the course of seven years, tweeted out every word in the English language in alphabetical order.

LEAH: And then there are more complex ones like @PowerVocabTweet, which was programmed to tweet out new, invented words with made-up definitions. So, for example, it tweeted out: “prebsorption,”—I don’t really know how to pronounce it—which is a noun that means “the fare charged for riding in a sailboat.”

[LAUGHS]

LAURA: That’s so good.

LEAH: I know. I didn’t know that I needed that word, but now that I have it, I want to use it.

LAURA: Oh, yeah. We gotta.

LAURA: Well, there’s also my favorite, which is the deep question bot.

LEAH: Deep question bot uses something called ConceptNet, which is basically a huge semantic network that maps the relationship between words and concepts in the English language. And it’s this really cool online tool actually, that was originally built in the late ’90s, and computers use it to understand the meaning of words and ideas in our language.

ALLISON: So it will tell you like, you know, “Hot is the opposite of cold” or “Cheese is a type of dairy product.” So the way that @deepquestionbot works is that it essentially selects one of those facts at random, and then turns it into a question. So instead of writing it as like, “Cheese is a type of dairy product,” it will, instead, write that out as an English question that says like, “Well, why must cheese be a type of dairy product?” Right? [laughs] Or instead of it saying like, “A finger is a part of a hand,” it will say like, “Why should it be the case that a finger should be a part of the hand? Why must a hand have fingers as parts?”
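
The process Allison describes (pick a fact at random, then rephrase it as a question) can be sketched in Python as follows. This is a guess at the general shape, not her actual code. The facts are typed in by hand rather than pulled from ConceptNet, and the relation labels and question templates are assumptions made for this example.

```python
import random

# Hand-written (subject, relation, object) facts standing in for ConceptNet data.
FACTS = [
    ("cheese", "IsA", "a type of dairy product"),
    ("a finger", "PartOf", "a hand"),
    ("hot", "Antonym", "cold"),
]

# One hypothetical question template per relation label.
TEMPLATES = {
    "IsA": "Why must {a} be {b}?",
    "PartOf": "Why should it be the case that {a} is a part of {b}?",
    "Antonym": "Why is {a} the opposite of {b}?",
}


def deep_question(rng):
    """Select one fact at random and turn it into a question."""
    a, relation, b = rng.choice(FACTS)
    return TEMPLATES[relation].format(a=a, b=b)


print(deep_question(random.Random()))
```

Calling `deep_question` twice and joining the results would give the two-question tweets she mentions a little later.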

LEAH: It’s such a great place to start a poem.

ALLISON: Right? [laughs] Or it could be the poem itself.

LEAH: I just … I just love this one: “Why are blackbirds considered to be birds? Why are men considered to be males?” [laughs]

ALLISON: Putting more than one question into a single tweet, I think is also important because then it makes you draw a connection between the two things.

LEAH: I'm suddenly aware of how much I'm bringing to what I'm reading. You know, how much I'm seeing two seemingly unrelated things and being like, “Oh, let me make sense of this.”

ALLISON: Right? Yeah, that's the kind of poetry that I like, personally.

LAURA: Do we think we should read some poetry now?

ALLISON: Okay, sure. So this is a little excerpt from a book called Articulations. And it was published by Counterpath in 2018, as part of Nick Montfort’s Using Electricity series. So I'll just read a tiny little bit of it. [reads]

Sweet hour of prayer, sweet hour of prayer it was the hour of prayers. In the hour of parting, hour of parting, hour of meeting hour of parting this. With power avenging. ... His towering wings; his power enhancing, in his power. His power. Thus: the blithe powers about the flowers, chirp about the flowers a power of butterfly must be with a purple flower, might be the purple flowers it bore. The petals of her purple flowers, where the purple aster flowered, here's the purple aster, of the purple asters there lives a purpose stern! A sterner purpose fills turns up so pert and funny; of motor trucks and vans and after kissed a stone, an ode after Easter. And iron laughter stirred, O wanderer, turn; oh, wanderer return. O wanderer, stay; O wanderer near. Been a wanderer. I wander away and then I wander away and thence shall we wander away, and then we would wander away, away O why and for what are we waiting. Oh, why and for what are we waiting, why, then, and for why are we waiting?

LEAH: Oh, my gosh. I'm, like, surprised at how moved I am by it.

LAURA: I know, me too!

LEAH: Which I'm embarrassed to even say.

ALLISON: Why are you embarrassed to say? I'm a professional poet. [laughs] It’s a compliment.

LEAH: Because, of course, I should be moved by it. And I shouldn't have any hesitation about that just because I know that it came from the machinations, if you will, of, you know, a robot.

ALLISON: Well, a computer program.

LEAH: A computer program.

ALLISON: I think it's useful to distinguish between those, but we don't have to get into that.

LEAH: No, good. Thank you. I appreciate that. [laughs]

LEAH: First of all, I think that the sounds were so beautiful. Like the way that the words kind of bounced off of each other and grew and developed from "wander" and "wonder" and from verb to gerund to noun in a really interesting way. I think the end stuck with me the most, the wandering, and I felt very much like it was someone or someones trying to find their way through something. Trying to find meaning in a kind of meaningless or confusing place, which sort of to me feels like what poetry is all about.

LAURA: And then there's a sort of, like, garden imagery, like, with the aster and everything. And so you kind of have this sense that—especially the way that the sounds are happening — there's like bouncing between like similar words — there's sort of a sense of like trying for something or like groping toward something. But I also don't really know what it's about.

LAURA: Okay, Allison, what's it about?

ALLISON: You're gonna be disappointed by me. [laughs] It's not about anything. There's no aboutness involved in the composition of this poem, really, in any way. In fact, it's specifically not about aboutness. The way that it was made is with a machine learning model of phonetics that I built, where essentially, it's a little bit of code that can take any sequence of words and turn them into a sequence of phonemes, like the constituent sounds in the words. So I took a big corpus of poetry from Project Gutenberg, which is a database available online of a lot of texts that are in the public domain. I wrote a program that found every line in, or every bit of text in Project Gutenberg that looks like its own line of poetry.

LAURA: It’s a bit complicated, at least for non-engineers like ourselves, but, basically, Allison’s program would map all of these lines based on how related they were to each other and then output them in sequential order. She ran the program to produce 20 alternative versions, picked her favorite one, and that became the book Articulations.

ALLISON: So there is actually explicitly no semantic information, no meaning in this, at all. And I actually tend to not read it thinking about what it means. Like it doesn't occur to me as a poet to look in it for meaning because, for me, it's purely about the pleasure of what happens in your mouth when you are pronouncing words, which is actually a tremendous pleasure. Like, it's fun to talk, and that's part of the reason it's fun to read poetry, also, for that reason, because poetry does make your mouth do weird things. And this, for me, is all about that feeling.
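
As a rough illustration of the idea described above (turn lines into sounds, then order them by how alike they sound), here is a minimal Python sketch. It is not Allison's program. The tiny pronunciation table is hand-written and hypothetical, and counting adjacent phoneme pairs with cosine similarity is a simple stand-in for her machine learning model of phonetics. The names `TOY_PRONUNCIATIONS`, `sound_vector`, and `chain_by_sound` are invented for this example.

```python
from collections import Counter
from math import sqrt

# Hypothetical, hand-written pronunciations (ARPAbet-style symbols).
# A real system would look words up in a pronouncing dictionary.
TOY_PRONUNCIATIONS = {
    "sweet": ["S", "W", "IY", "T"],
    "hour": ["AW", "ER"],
    "of": ["AH", "V"],
    "prayer": ["P", "R", "EH", "R"],
    "parting": ["P", "AA", "R", "T", "IH", "NG"],
    "his": ["HH", "IH", "Z"],
    "power": ["P", "AW", "ER"],
    "the": ["DH", "AH"],
    "purple": ["P", "ER", "P", "AH", "L"],
    "flower": ["F", "L", "AW", "ER"],
    "aster": ["AE", "S", "T", "ER"],
}


def phonemes(line):
    """Turn a line of text into one flat list of phoneme symbols."""
    sounds = []
    for word in line.lower().split():
        sounds.extend(TOY_PRONUNCIATIONS[word])
    return sounds


def sound_vector(line):
    """Count adjacent phoneme pairs; meaning plays no part in this."""
    p = phonemes(line)
    return Counter(zip(p, p[1:]))


def similarity(a, b):
    """Cosine similarity between two phoneme-pair count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chain_by_sound(lines):
    """Greedy walk: start at the first line, then keep hopping to the
    closest-sounding line that has not been used yet."""
    vectors = {line: sound_vector(line) for line in lines}
    order = [lines[0]]
    remaining = set(lines[1:])
    while remaining:
        current = vectors[order[-1]]
        # sorted() keeps tie-breaking deterministic
        nxt = max(sorted(remaining), key=lambda l: similarity(current, vectors[l]))
        order.append(nxt)
        remaining.remove(nxt)
    return order


lines = [
    "sweet hour of prayer",
    "the purple aster",
    "hour of parting",
    "his power",
    "the purple flower",
]
for line in chain_by_sound(lines):
    print(line)
```

Because the ordering only ever looks at sounds, neighboring lines end up echoing each other without sharing any subject, which matches the "no semantic information" point made in the conversation.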

LAURA: Leah and I are just—we're very rudimentary beings, and we're just constantly searching for meaning where there is none. So. … [laughs]

ALLISON: I don't think it's rudimentary at all. For me, when I took those standardized tests as a kid, reading comprehension was always my lowest score. [laughs] I think there's just something constitutionally about me. Like, I’m good at appreciating the surface level of texts, but, like, the meaning doesn't actually penetrate.

LAURA: So if the program is looking at a lot of other poetry, this is a concern for human poets as well? Is plagiarism a concern? Or would that not happen?

ALLISON: Yeah, it's absolutely a concern. And that's why I specifically use Project Gutenberg because the texts are all in the public domain. So there's no legal—I don't have any legal problems using this text. Because I have a right to use it. It's in the public domain.

ALLISON: The flip side of that, of Project Gutenberg being in the public domain, is that almost all of the authors in there are dead, cis, white, American or British men, which means that the diction and the kinds of language are very limited and often reflect certain viewpoints about the world that I don't necessarily share. So there is sort of a double-edged sword. Like, I'm not technically plagiarizing, but I am kind of re-animating these voices in a way that I have to be careful about.

LAURA: So how would you describe like the ownership of this poem? Or is that just not even something that concerns you?

ALLISON: No, it's mine. I wrote it. I think that my authorship is very, very clear. [laughs] I wrote the computer program, right? I did a lot of work. And the work that I did is basically like: I had an idea for a poem in my head. And then I did a lot of typing. And then, at the end, there was a poem. So I don't know if that's different from regular, traditional ways of doing authorship. I don't diminish my agency over this process at all. And that goes both ways, right? I think that it's actually really important for artists and designers, makers, writers to take responsibility over their processes, right, because otherwise it gives you a way of weaseling out of responsibility for when things go wrong.

LEAH: So I think that there is kind of, I think there's a lot of fear around the idea of robots or computers or AI generating things that we think of as traditionally created by humans. So, you know, where does this fear come from? And how do you respond to it?

ALLISON: I don't know where the fear comes from. But it's very, very old. It predates computers by a long time, actually. [laughs] There's this thing that I found out when I was doing research for one of my classes last year, that, in France, in like the 1860s, somebody was saying that there was a novel that was written by a chair, Juanita, Nouvelle par une Chaise. And that was basically saying like this entire novel was written by a chair. And that's really surprising, and it has all of these implications for literature. And, if you look at that, and the reaction to it, it's almost the same thing as our reaction nowadays to like “an AI wrote this article,” right? This fear that this creative work is going to be taken out of our hands. So I don't know where it comes from. I think it just has to do with like the regular like capitalism issues of like, “I don't want my work to go away because then I won't have money.” I think it maybe also has to do with a fear about intention and creativity and thinking that creativity isn't actually real work or something like that. Or maybe just like schadenfreude on the part of the people who are like, “Well, you poets think you're so fancy. But it turns out a computer can do this. So I guess you're not so fancy after all.”

LAURA: I think about this a lot as a writer—that there's a lot of like elitism surrounding writing and something about doing it in conjunction with a computer or having a computer generate it is really, like, humbling I think to writers. [laughs]

ALLISON: I think that makes sense. I think for me, as someone on the other side of that—I primarily think of myself as a software engineer, right? That's what my actual professional background is. I only came to poetry indirectly, so I don't see it from that perspective at all. Like, I can see all of the labor that goes into producing these systems that generate poetry. And it's actually, like, an incredible amount of labor. When you look at one of these articles, like the one that was in the Guardian the other day, where the headline was like, “This article was generated by AI. Are you afraid yet?” was like literally the subhead was like, “Are you afraid yet?”

ALLISON: But when I look at that, what I see is like: It's not "an AI wrote this." It's first of all—OpenAI, the organization which employs many people, many engineers, many people who know a lot about language produced a system that can do this. It's based on a corpus of, you know, terabytes of text that were written by people. And then the people themselves at the Guardian, ran the program, fed it with a particular input, and then made choices about which of those outputs to include in the article. So saying that an AI wrote this article, to me, it's not humbling to writers at all. In fact, it's saying, like, "Look at, look at the combined effort. If writers work together, this is the thing that could happen, right?" But attributing it to AI is sort of like attributing the pyramids to the Pharaoh, right? Pharaoh didn't do that; the workers did. [laughs]

LAURA: It actually sounds like a lot more work than writing.

ALLISON: Yeah, it is, actually. And that's kind of the hidden secret of any of this stuff that has to do with AI is that it really is a way of obscuring labor. It's a way of, like, collecting labor, putting it into a box, and saying like, “No, nobody actually worked on this. So we don't have to pay anybody for this. We don't have to concern ourselves with the implications of all of the labor that went into making this thing happen. We can just say that the AI did it and, thereby, absolve ourselves of all responsibility for what actually happened.”

LAURA: Should we read another one, or Leah do we have enough time for that?

LEAH: I would love to.

LAURA: For this poem, Allison used the same program she used for Articulations, but this time she mapped the relationship between individual words and found the “midpoint” between each pair.

ALLISON: And the examples that I give for this are like, between "paper" and "plastic" is the word peptic: paper, peptic, plastic. Between "kitten" and "puppy" is the word committee: kitten, committee, puppy. You can see that committee sort of sounds like both "kitten" and "puppy." Between "birthday" and "anniversary" is perversity: birthday, perversity, anniversary. And then between "artificial" and "intelligence" is ostentatious: artificial, ostentatious, intelligence.
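
One way to picture a phonetic "midpoint" is the minimal Python sketch below. It is an illustration under stated assumptions, not Allison's algorithm: each word is reduced to a count of its phonemes (which ignores their order), the two counts are averaged, and the vocabulary word closest to that average wins. The six-word pronunciation table is hand-written and hypothetical, and the names `sound_counts`, `midpoint`, and `word_between` are invented for this example.

```python
from collections import Counter

# Hypothetical, hand-written pronunciations (ARPAbet-style symbols).
TOY_PRONUNCIATIONS = {
    "paper": ["P", "EY", "P", "ER"],
    "plastic": ["P", "L", "AE", "S", "T", "IH", "K"],
    "peptic": ["P", "EH", "P", "T", "IH", "K"],
    "kitten": ["K", "IH", "T", "AH", "N"],
    "puppy": ["P", "AH", "P", "IY"],
    "committee": ["K", "AH", "M", "IH", "T", "IY"],
}


def sound_counts(word):
    """Represent a word as a count of each phoneme it contains."""
    return Counter(TOY_PRONUNCIATIONS[word])


def midpoint(a, b):
    """Average two phoneme-count vectors, phoneme by phoneme."""
    return {k: (a[k] + b[k]) / 2 for k in set(a) | set(b)}


def distance_sq(point, counts):
    """Squared Euclidean distance between a midpoint and a word's counts."""
    keys = set(point) | set(counts)
    return sum((point.get(k, 0) - counts[k]) ** 2 for k in keys)


def word_between(first, second):
    """Return the vocabulary word whose sounds sit closest to the midpoint,
    excluding the two endpoint words themselves."""
    target = midpoint(sound_counts(first), sound_counts(second))
    candidates = [w for w in TOY_PRONUNCIATIONS if w not in (first, second)]
    return min(candidates, key=lambda w: distance_sq(target, sound_counts(w)))


print(word_between("paper", "plastic"))
print(word_between("kitten", "puppy"))
```

With a vocabulary this small the result is only a toy; with a full pronouncing dictionary and a richer representation of sound, the same "average, then find the nearest word" step is what would surface in-between words like the ones read aloud here.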

ALLISON: So one piece that I wrote with this algorithm I call “the Interpolated ABCs.” So this is the ABCs but filling in the gaps between the letters. So it goes like this:

a, aah, b, beatie, beachy, c, cd, ddt, deedee, d, addie, e, a, aah, f, sffed, jeffe, effigy, vecci, g, edgy, geddie, giuseppe, edyth, h, ich, eighth, aah, i, aah, ooh, aday, j, che, k, katey, cail, l, mtel, m, airmen, n, aw, o, aw, ooh, p, kyowa, kewaunee, q, ru, r, s, essie, tessie, techy, t, yao, hew, u, hew, yoy, venue, v, dubey, w, acceptable, detectable, extendable, adaptec's, x, equitex, ex-wife, y, yeah, they, z

ALLISON: ... And now you know your interpolated ABCs. [laughs]

[LAUGHS]

LEAH: That just brings me so much joy. I was, like, containing my giggles.

ALLISON: Well, thank you. I assume that's a good thing.

LEAH: Yes, I think so.

LAURA: Joy is always a good thing. In these joyless times, we love them.

ALLISON: That is true, that is true.

LEAH: So, okay, I know you said that these poems aren't necessarily about something in the way that I and Laura conceive, but I'm thinking, when I think of, I'm not going to use "traditional" but you said kind of—what was the word you used?—intentional poetry created, you know, organically, just by a human. I feel like a big part of poetry is about communicating and telling a story, or reaching other humans expressing a human experience, and if that's not the case with, you know, computer program generated poetry, what is it for?

ALLISON: I mean, I think I would disagree with the premise that that is what poetry is about, actually. [laughs] I think that that's what storytelling is about. I think that that's what, you know, particular genres of literature are about. And this might be an unpopular thing to say about poetry, but, in my own internal taxonomy of poetry, poetry is specifically the kind of literature that isn't necessarily concerned with telling a story. Poetry is the genre of literature where it is about the way that we relate to language and how language helps us relate to one another. And not necessarily about the idea of telling the story. And that's where I see computer-generated poetry. Not that you can't tell stories with computer-generated things, right? But I think that the relationship that happens with computer-generated poetry is the relationship of like, you know, “I made a thing, and I'm sharing it with you.” [laughs] Like it's an exploration of the form and it's an exploration of what our eyes and our mouths and our ears and our bodies do in response to this weird thing called "language."

[MUSIC]

LEAH: I think what Allison is doing is pretty cool and innovative. Like, she’s using computer programs and artificial intelligence as tools to express her own creativity. But, you know, you just can’t get away from the fact that it’s kind of hinting at this future in which robots play a bigger and bigger role in things like creative writing. And, I don’t know, that’s not something that I feel super comfortable with.

LAURA: Yeah, and, in a way, it’s the same with Liam’s prank. Like, yes, it’s really cool that a robot wrote a self-help article that was convincing enough to fool a bunch of human readers. But, as he said, it seems like something that, if not carefully controlled, could lead to some creepier or maybe even dangerous uses.

LEAH: Yeah, and I think what scares me is the idea that we could one day lose track of this distinction between human and machine. And—I don’t know—maybe writers like us could even become … like obsolete? What do you think, Pat?

PAT: We should be able to differentiate between the robot and ourselves. But if we erased that difference, well, then we’re in a whole new world.

LAURA: Yeah, a world where Leah and I are selling our human-written stories on Etsy. Or antiques.com.

PAT: You know the story of John Henry, right? I know it is a song. We used to have to sing it in school.

LEAH: You’re gonna have to sing it.

LAURA: Yeah, sing it right now.

PAT: [sighs] You promise it won’t go on the show? [laughs]

LAURA: Might go at the end, but just go on.

PAT: Uh, it goes: “John Henry was hammering on the mountain / There was fire in his eyes / And he drove so hard that he broke his heart. / He laid down his hammer and he died. / And he laid down his hammer and he died.” And then I would always do this train sound [woo-woo-wooo]. [laughs] It always needed the train sound at the end.

LEAH: [laughs] That was a really good train.

PAT: But you know that John Henry’s up against the steam hammer, right? So his job is, like, laying down track, driving the stakes—I guess is what you call them—to hold down the railroad tracks, right? But they invented a machine that could do it. And, you know, it was a point of pride for John Henry to beat that machine. And he doesn’t. He dies trying; the machine wins. And, now, the question I always had was, why does John Henry want to keep doing that? Right? And yet, John Henry’s, there’s something heroic, you got to admit, like he’s fighting this machine. And so … maybe there will be a day when the best literature in the world is written by super intelligent computers, and there’s … nothing left for us to do. Are we going to be like John Henry?

[MUSIC IN: “THE BALLAD OF JOHN HENRY”]

LAURA: This is The Edge, brought to you by California magazine and the Cal Alumni Association. I’m Laura Smith.

LEAH: And I’m Leah Worthington.

LAURA: This episode was produced by Coby McDonald, with support from Pat Joseph. Special thanks to Liam Porr, Allison Parrish, Brooke Kottmann, and California magazine interns Maddy Weinberg, Boyce Buchanan, and Dylan Svoboda. Original music by Mogli Maureal.

PAT: I can do the train sound again for ya.

LEAH & LAURA: Do it one more time.

PAT: Woo woo woo!

[MUSIC OUT]