“We turned the switch and saw the flashes,” said physicist Leo Szilard, describing his 1939 experiment showing that uranium fission releases neutrons, the discovery that made a nuclear chain reaction possible. “We watched them for a little while and then turned everything off and went home. That night, there was very little doubt in my mind that the world was headed for grief.”

The quote is one that UC Berkeley computer science professor Stuart Russell likes to share when giving talks about the future of artificial intelligence (AI). Like nuclear power before it, AI holds great transformative promise, he says. “If we build super-intelligent machines, it would—arguably—be the biggest event in the history of the human race.” Unfortunately, he also warns, when it comes to anticipating the risks posed by new technologies, we humans “don’t have a great track record.”

Russell has devoted his career to making machines smarter, “because smart computers are better,” he says, “and dumb computers are annoying—as we all know.” A professor at UC Berkeley since 1986 and former chair of the electrical engineering and computer science department, he has worked on such robot-enlightening topics as metareasoning, the collision of logic and probability, and inverse reinforcement learning.

Until recently, Russell explains, the main focus of AI research was to build intelligence without regard to its repercussions. Now, spurred by accelerating advances in the field, a growing number of prominent businesspeople and scientists, including Bill Gates, Elon Musk, and Stephen Hawking, have declared that the risks posed by AI are real and significant, and that they need to be addressed now.

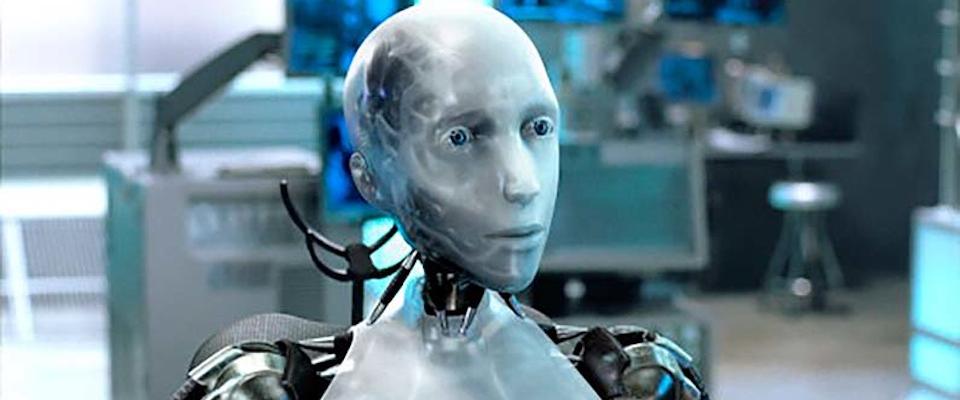

At issue is what’s known as “the singularity”—the moment when machines usurp our comfortable seat atop the intelligence pyramid. Some futurists view this event as cause for celebration; super-intelligent robots could one day solve the pressing problems of our time and take over tasks we abhor. But there are reasons to worry. For example, consider a machine that “doesn’t want to be switched off, doesn’t exactly share your values, and is much more intelligent than you are,” Russell offers. “It’s pretty clear that’s a problem.”

With world militaries funding research into autonomous weapons, human extermination by misanthropic robots seems creepily plausible, but machines wouldn’t necessarily have to be malevolent to be harmful. Oxford University philosopher Nick Bostrom has described one such scenario: a super-intelligent paperclip-manufacturing robot that discovers an efficient way to turn everything, the whole world in fact, into a giant paperclip factory.

The key to ensuring human-friendly AI, Russell believes, is developing machines that share our values. Ultimately, machines may learn values much the way we do, through observation.

“There’s a huge amount of information available about how humans behave and how they value behavior,” Russell says, “because everything we write about, every newspaper story, novel, and movie are about what people do and what people think about what those people do.” If we develop machines that can understand those materials, he suggests, “a system could learn a lot about what humans value.”

In the past, many scientists in the AI field have been dismissive of doomsday prognostications, viewing them as uninformed and overblown, says Russell. But “the community is starting to understand that we need to take ourselves more seriously and recognize that artificial intelligence can have a big impact on the human race.”

Although most estimates put human-level AI several decades away, Russell believes that putting off the problem risks repeating the mistakes of the past.

“If someone said a 50-mile-wide asteroid is going to hit the Earth in 75 years’ time, you wouldn’t say, ‘Oh well, we don’t need to worry about it now. Come back when it’s a few miles away.’ You would start working on solutions right now.”