For many Americans, Donald Trump’s 2016 victory came as a shock, especially considering how far he had trailed Hillary Clinton in the polls. Even FiveThirtyEight founder and famed election forecaster Nate Silver got it wrong. But UC Berkeley business professor Don Moore thinks we should cut Silver some slack.

“He got some flak for his forecasts that Hillary would win with 70 percent probability,” says Moore. “Should we be outraged that an outcome occurred, Trump winning the election, that Silver only gave a 30 percent probability? No, things with 30 percent probability happen all the time. If you think there’s a 30 percent chance of rain tomorrow, there’s a good chance you’re gonna bring your umbrella.”

A professor at the Haas School of Business, Moore studies confidence, including how well people’s confidence judgments are calibrated. “What we find systematically is that people’s confidence intervals are too narrow,” says Moore. “That is, when I ask for a 90 percent confidence interval, they give me a range,” such as plus or minus three percent. “How often does the truth fall inside that range? Substantially less than 90 percent. Often down around 50 percent of the time.”

When his undergraduate research apprentice Aditya Kotak, now a recent graduate, noted that most political pollsters report 95 percent confidence levels, Moore decided to investigate whether polls suffer from the same overconfidence.

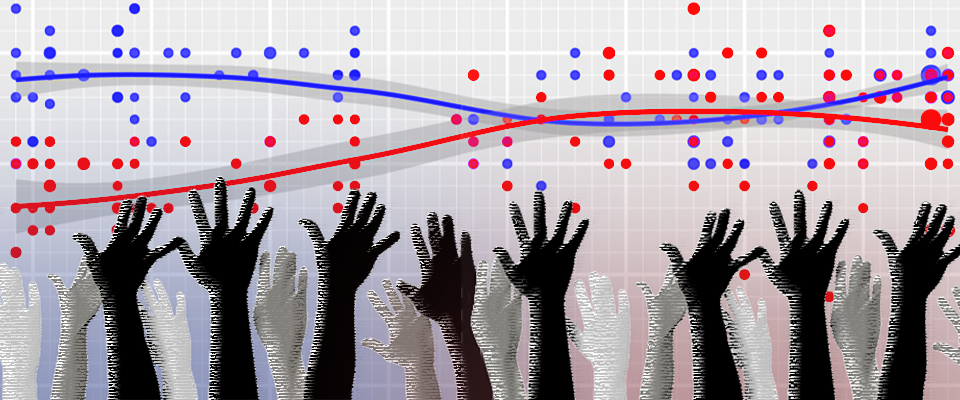

By analyzing data from more than 1,400 polls across 11 election cycles, Moore and Kotak found that for only 60 percent of polls conducted in the week before an election did the actual outcome fall within the poll’s reported 95 percent confidence interval.
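The measure behind that 60 percent figure is simple to state: a poll counts as a hit if the candidate’s actual vote share lands inside the poll’s estimate plus or minus its margin of error. The Python sketch below computes that hit rate for a handful of polls. The `Poll` record, the function names, and every number in it are hypothetical, invented for illustration; they are not data from Moore and Kotak’s study.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    estimate: float  # candidate's estimated vote share, in percent
    margin: float    # reported margin of error, in percentage points
    actual: float    # candidate's actual vote share, in percent


def covers(poll: Poll) -> bool:
    """True if the actual result falls inside estimate +/- margin."""
    return abs(poll.actual - poll.estimate) <= poll.margin


def coverage_rate(polls: list[Poll]) -> float:
    """Fraction of polls whose interval contains the actual result."""
    if not polls:
        raise ValueError("need at least one poll")
    return sum(covers(p) for p in polls) / len(polls)


# Hypothetical toy polls, NOT data from the study.
polls = [
    Poll(estimate=56.0, margin=3.0, actual=52.0),  # off by 4 points: miss
    Poll(estimate=50.0, margin=3.0, actual=51.5),  # off by 1.5: hit
    Poll(estimate=47.0, margin=3.0, actual=44.5),  # off by 2.5: hit
    Poll(estimate=53.0, margin=3.0, actual=49.0),  # off by 4 points: miss
    Poll(estimate=45.0, margin=3.0, actual=46.0),  # off by 1: hit
]
print(coverage_rate(polls))  # 0.6
```

A well-calibrated set of 95 percent intervals would push this rate toward 0.95 over many polls; a rate well below that is the overconfidence Moore describes.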

To many, 60 percent accuracy sounds bad, especially for something as important as presidential election results. But these findings might not be as discouraging as they seem. “It’s really important to remember that these polls are not forecasting who’s going to win. They are an estimate of likely vote share,” meaning the percentage of total votes that one candidate receives, Moore explains. “So this is about whether the truth falls inside the confidence interval, not whether the poll calls the eventual winner.”

A standard election poll, with a 95 percent confidence interval and a sample size of 1,000 people, estimates the vote share a candidate is likely to receive, plus or minus about three percentage points. If, for example, a poll predicts that Joe Biden will get 56 percent of the popular vote but he actually gets 52 percent, the outcome falls outside the poll’s three-point margin of error. The general public might consider that poll a success, since it correctly predicted that Biden would win a majority, but Moore’s study would count it as a miss.
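The three-point figure follows from the textbook margin of error for a proportion under simple random sampling, z × √(p(1 − p)/n), with z = 1.96 for 95 percent confidence and p = 0.5 as the worst case. The sketch below assumes those conventions (real pollsters also adjust for weighting and design effects, which this ignores) and then checks the hypothetical Biden example against the result.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Sampling margin of error for a proportion, in percentage points.

    Normal approximation z * sqrt(p * (1 - p) / n), assuming simple random
    sampling; p = 0.5 gives the widest (most conservative) margin.
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(1000)
print(round(moe, 1))  # 3.1

# The article's hypothetical: the poll says 56 percent, the result is 52.
estimate, actual = 56.0, 52.0
print(abs(actual - estimate) <= moe)  # False: outside the interval
```

Because the margin shrinks only with the square root of the sample size, quadrupling a poll to 4,000 respondents would merely halve this statistical margin, and it would do nothing about the non-statistical errors discussed below.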

The confidence intervals most political pollsters report account only for statistical sources of error, such as sampling error. Non-statistical sources of error could explain the gap between the polls’ stated 95 percent confidence and their 60 percent hit rate. Gabriel Lenz, a Berkeley professor who specializes in American politics and political behavior, says one possible culprit is herding, a practice in which pollsters adjust their findings to more closely resemble those of other recent polls and avoid reporting outliers. According to Lenz, likely voter screens, a series of questions pollsters ask to determine whether someone is likely to vote and should therefore be included in an election survey, may also introduce error, because some of the people screened out as unlikely voters end up voting anyway.

How can pollsters do better? “Expand the confidence intervals they report,” says Moore. “The truth is that pollsters who communicate their polls relying solely on statistical sources of error will underestimate the actual error in their polls and therefore provide misleading confidence intervals.” According to his analysis, pollsters would have to double the margins of error they report in the week leading up to Election Day to achieve 95 percent confidence. That means published results would need to give a candidate’s estimated vote share plus or minus six percentage points, rather than the usual three, to capture the actual election result 95 percent of the time.
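One simple way to see what a calibrated margin would look like is to take past polls’ actual errors and find the smallest margin that would have covered 95 percent of them. The sketch below does that with a nearest-rank percentile. It illustrates the idea only and is not necessarily how Moore and Kotak computed their estimate; the function names and the list of past errors are hypothetical, made up for this example.

```python
import math


def coverage(errors: list[float], margin: float) -> float:
    """Fraction of past absolute errors that fall within +/- margin."""
    return sum(abs(e) <= margin for e in errors) / len(errors)


def empirical_margin(errors: list[float], level: float = 0.95) -> float:
    """Smallest past error that covers at least `level` of all errors.

    Uses the nearest-rank percentile: sort the absolute errors and take
    the value at rank ceil(level * N).
    """
    if not errors:
        raise ValueError("need at least one past error")
    ranked = sorted(abs(e) for e in errors)
    k = math.ceil(level * len(ranked)) - 1  # zero-based index of that rank
    return ranked[k]


# Hypothetical absolute poll errors in percentage points, NOT study data.
errors = [0.4, 0.8, 1.1, 1.4, 1.7, 2.0, 2.2, 2.4, 2.6, 2.8,
          2.9, 3.0, 3.6, 4.1, 4.5, 4.9, 5.3, 5.6, 5.9, 7.2]

print(coverage(errors, 3.0))      # 0.6
margin = empirical_margin(errors)
print(margin)                     # 5.9
print(coverage(errors, margin))   # 0.95
```

With these made-up errors, a ±3 margin covers only 60 percent of past polls, while a margin near six points covers 95 percent. The widened margin comes from observed errors rather than the sampling formula, so it absorbs sources of error such as herding or likely voter screens that the formula leaves out.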

For now, we can’t count on election polls to be as accurate as their confidence levels suggest. So do they still have value? “Certainly,” says Moore. “Just being able to anticipate the outcome of an election is very useful, even if you can only do so imperfectly. Just like anticipating whether it’s going to rain tomorrow, or where the hurricane is going to land is very useful information, even if your forecasts aren’t spot on.”

Lenz agrees. Even with their imperfections, polls still offer valuable insight. For instance, they can help us judge whether election results are plausible: if an election outcome were to diverge massively from polling predictions, we would likely suspect voter fraud. Polls can also help politicians learn what voters expect from them. “In this case, the lesson might be that voters really want the president to do a good job managing a pandemic. Being able to look at polls and see that message is really important for democracy,” says Lenz. “It’s important for politicians to learn from elections. One of the main ways that we can help them do that is to conduct polls, and they don’t need to be perfectly accurate for that.”

With the 2020 election just around the corner, instead of obsessing over polls, Lenz suggests that concerned voters find more constructive ways to spend their time. “Political junkies get into polls, like it’s a sports game or something,” he says. “They could probably use that time to try to convince other people to change their votes, or try to get other people to vote to increase overall turnout.”