It started with a conversation. About two years ago, Claudia von Vacano, executive director of UC Berkeley’s social science D-Lab, had a chat with Brittan Heller, the then-director of technology and society for the Anti-Defamation League (ADL). The topic: the harassment of Jewish journalists on Twitter. Heller wanted to kick the offending trolls off the platform, and Vacano, an expert in digital research, learning, and language acquisition, wanted to develop the tools to do it. Both understood that neither humans nor computers alone were sufficient to root out the offending language. So, in their shared crusade against hate speech and its malign social impacts, a partnership was born.

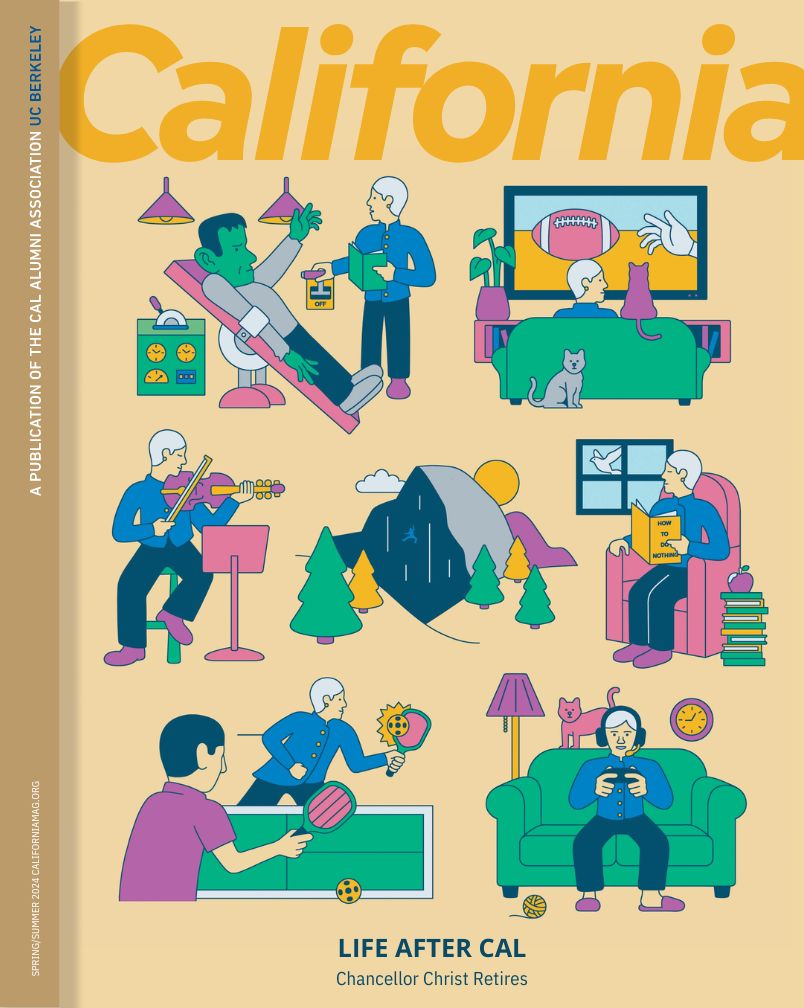

Developers anticipate major social media platforms will use the Online Hate Index to recognize and eliminate hate speech rapidly and at scale.

Hate speech, the stinking albatross around the neck of social media, has become increasingly linked to violence, even atrocity. Perhaps the most egregious recent example: Robert Bowers, the accused shooter in the October massacre at Pittsburgh’s Tree of Life Synagogue, was reportedly inflamed by (and shared his own) anti-Semitic tirades on Gab, a platform popular with the Alt-Right that was temporarily deactivated following the shooting.

Currently, Facebook and Twitter employ thousands of people to identify and jettison hateful posts. But humans are slow and expensive, and many find the work emotionally taxing—traumatizing, even. Artificial Intelligence and machine learning are the obvious solution: algorithms that can work effectively at both speed and scale. Unfortunately, hate speech is as slippery as it is loathsome. It doesn’t take a very smart AI to recognize an overtly racist or anti-Semitic epithet. But more often than not, today’s hate speech is deeply colloquial, or couched in metaphor or simile. The programs that have been developed to date simply aren’t up to the task.

That’s where Vacano and Heller come in. Under Vacano’s leadership, researchers at D-Lab are working in cooperation with the ADL on a “scalable detection” system—the Online Hate Index (OHI)—to identify hate speech. The tool learns as it goes, combining artificial intelligence, machine learning, natural language processing, and good old human brains to winnow through terabytes of online content. Eventually, developers anticipate major social media platforms will use it to recognize and eliminate hate speech rapidly and at scale, accommodating evolutions in both language and culture.

“The tools that were—and are—available are fairly imprecise and blunt,” says Vacano, “mainly involving keyword searches. They don’t reflect the dynamic shifts and changes of hate speech, the world knowledge essential to understanding it. [Hate speech purveyors] have become very savvy at getting past the current filters—deliberately misspelling words or phrases.” Current keyword algorithms, for example, can be flummoxed by something as simple as substituting a dollar sign ($) for an “S.”
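To make the dollar-sign problem concrete, here is a minimal Python sketch of a keyword filter and one possible patch for it. It is an illustration only, not D-Lab's method or any platform's actual filter: the blocklist (a harmless stand-in word), the substitution map, and both function names are assumptions invented for this example.

```python
# Hypothetical blocklist; a harmless stand-in word is used for illustration.
BLOCKLIST = {"spam"}

# Assumed map of common character swaps, not taken from any real system.
SUBSTITUTIONS = str.maketrans({"$": "s", "@": "a", "0": "o", "1": "i", "3": "e"})


def naive_filter(text: str) -> bool:
    """Flag text only if one of its words matches the blocklist exactly."""
    return any(word in BLOCKLIST for word in text.lower().split())


def normalized_filter(text: str) -> bool:
    """Undo the assumed character swaps, then apply the same keyword check."""
    return naive_filter(text.lower().translate(SUBSTITUTIONS))
```

Under these assumptions, `naive_filter("this is $pam")` misses the disguised word while `normalized_filter("this is $pam")` catches it. The patch only covers swaps someone has already thought to list, which is the limitation Vacano describes: a fixed table cannot keep up with deliberate misspellings, let alone metaphor.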

Another strategy used by hatemongers is metaphor: “Shrinky Dinks,” for example, the plastic toys that shrink when baked in an oven, allude to the Jews immolated in the concentration camps of the Third Reich. Such subtle references are hard for current AI to detect, Vacano says.

The OHI is intended to address these deficiencies. Already, the team’s work has attracted the attention and financial support of the platforms most bedeviled by, and most criticized for, hate-laced content: Twitter, Google, Facebook, and Reddit.

But no matter how well intentioned, any attempt to control speech raises Constitutional issues. And the First Amendment is clear on the matter, says Erwin Chemerinsky, the dean of Berkeley Law.

“First, the First Amendment applies only to the government, not to private entities,” Chemerinsky stated in an email to California. “Second, there is no legal definition of hate speech. Hate speech is protected by the First Amendment.” Unless it directly instigates violence, that is, an exception upheld in the 1942 Supreme Court decision Chaplinsky v. New Hampshire.

In other words, the platforms can decide what goes up on their sites, whether it’s hateful or not. Vacano acknowledges this reality: D-Lab, she says, isn’t trying to determine the legality, or even appropriateness, of moderating hate speech.

“We are developing tools to identify hate speech on online platforms, and are not legal experts who are advocating for its removal,” Vacano stated in response to an email query. “We are merely trying to help identify the problem and let the public make more informed choices when using social media.” And, for now, the technology is still in the research and development stage.

“We’re approaching it in two phases,” says Vacano. “In the first phase, we sampled 10,000 Reddit posts that went up between May and October of 2017. Reddit hadn’t implemented any real means for moderating their community at that point, and the posts from those months were a particularly rich trove of hate speech.”

D-Lab initially enlisted ten students of diverse backgrounds from around the country to “code” the posts, flagging those that overtly, or subtly, conveyed hate messages. Data obtained from the original group of students were fed into machine learning models, ultimately yielding algorithms that could identify text that met hate speech definitions with 85 percent accuracy, missing or mislabeling offensive words and phrases only 15 percent of the time.

Though the initial ten coders were left to make their own evaluations, they were given survey questions (e.g., “…Is the comment directed at or about any individual or groups based on race or ethnicity?”) to help them differentiate hate speech from merely offensive language. In general, “hate comments” were associated with specific groups while “non-hate” language was linked to specific individuals without reference to religion, race, gender, etc. Under these criteria, a screed against the Jewish community would be identified as hate speech while a rant, no matter how foul, against an African-American celebrity might get a pass, as long as his or her race wasn’t cited.

Vacano emphasizes the importance of making these distinctions. Unless real restraint is exercised, free speech could be compromised by overzealous and self-appointed censors. D-Lab is working to minimize bias with proper training and online protocols that prevent operators from discussing codes or comments with each other. They have also employed Amazon’s Mechanical Turk, a crowdsourcing service that can be customized for diversity, to ensure that a wide range of perspectives, ethnicities, nationalities, races, and sexual and gender orientations are represented among the coding crew.

So then, why the initial focus on Reddit? Why not a platform that positively glories in hate speech, such as the white nationalist site Stormfront?

“We thought of using Stormfront,” says Vacano. “But we decided against it, just as we decided against accessing the Dark Web. We didn’t want to specialize in the most offensive material. We wanted a mainstream sample, one that would provide a more normal curve, and ultimately yield a more finely-tuned instrument.”

With proof of concept demonstrated by the Phase 1 analyses of Reddit posts, Vacano says, D-Lab is now moving on to Phase 2, which will employ hundreds of coders from Mechanical Turk to evaluate 50,000 comments from three platforms—Reddit, Twitter, and YouTube. Flags will go up if pejorative speech is associated with any of the following rubrics: race, ethnicity, religion, national origin or citizenship status, gender, sexual orientation, age or disabilities. Each comment will be evaluated by four to five people and scored on consistency.
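The article does not say how consistency will be scored. As one simple possibility, the Python sketch below takes the labels that four or five coders give a single comment and returns the most common label along with the share of coders who chose it. The function name, the label strings, and the scoring rule are all assumptions made for illustration, not D-Lab's procedure.

```python
from collections import Counter


def score_comment(labels: list[str]) -> tuple[str, float]:
    """Return the most common label and the share of coders who chose it.

    Assumed scoring rule for illustration; ties go to the label seen first.
    """
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(labels)
```

With five hypothetical coders, four of whom mark a comment as hate and one as merely offensive, `score_comment` returns the hate label with an agreement of 0.8. A project of this kind might set low-agreement comments aside for review rather than feed them to a model as if the label were settled.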

Vacano expects more powerful algorithms and enhanced machine learning methods to emerge from Phase 2, along with a more comprehensive lexicon that can differentiate between explicit, implicit, and ambiguous speech in the offensive-to-hate range. Ultimately computers, not humans, will be making these distinctions, a necessity given the scope of the issue. But is that cause for concern?

Erik Stallman, an assistant clinical professor of law and the faculty co-director of the Berkeley Center for Law and Technology, says that, because of the ubiquity of social media, attempts to moderate online hate speech are based on sound impulses.

“And considering the scale at which [the platforms] operate, automated content moderation tools are a necessity,” Stallman says. “But they also have inherent limitations. So platforms may have hate speech policies and still have hateful content, or they may have standards that aren’t well defined,” meaning posts are improperly flagged or users are unfairly excluded from the site.

In any attempt to control hate speech, says Stallman, “the community of users should have notice of, and ideally, input on the standards. There should also be transparency—users should know the degree of automated monitoring, and how many posts are taken down. And they should also have some form of redress if they feel their content has been inaccurately flagged or unfairly taken down.”

Stallman also cited a Center for Democracy report that noted the highest rate of accuracy for an automated monitoring system was 80 percent.

“That sounds good, but it still means that one out of five posts that was flagged or removed was inaccurate,” Stallman says.

(As noted, D-Lab’s initial Online Hate Index posted an accuracy rate of 85 percent, and Vacano expects improved accuracy with Phase 2’s increased number of coders and expanded vocabulary.)

Unsurprisingly, D-Lab has been targeted by some haters.

“So far it’s only amounted to some harassment by ignorant commenters,” says Vacano. “But yes, there is some calculated risk [e.g., doxxing or stalking of D-Lab members] involved. It’s a sad and difficult issue, but we’re a highly motivated group, and what’s particularly exciting for me is that we’re also a deeply interdisciplinary group. We have a linguist, a sociologist, a political scientist and a biostatistician involved. David Bamman, an expert in natural language processing and machine learning, is contributing. I’m in educational policy. It’s rare to have this much diversity in a research project like this.”

Though D-Lab’s hate speech products will be founded on sophisticated AI and machine learning processes, humans will always have a role in their development, says Vacano. Computers and programs, after all, don’t hate. That’s a uniquely human characteristic, and only humans can track hate’s rapidly evolving and infinitely subtle manifestations. The code phrase Shrinky Dinks may be flagged as potentially hateful today, but the lexicon of hate is always changing.

“We’ll need to continually update,” Vacano says. “Our work will never be done.”

**CORRECTION:** This article previously stated that Gab was a deactivated platform. It has since been reactivated.