When considering The End—the Apocalypse, Armageddon, the Big Crunch, Kingdom Come, Ragnarök, the Big Freeze, the Big Rip, the Final Period, Alpha and Omega, rivers of blood, premium cable, whatever you want to call it—it’s worth bearing in mind the last time we had a real contender.

The Black Death. Now there’s an apocalypse you could sink your teeth into, provided you weren’t pinned to the floor in agony, covered in suppurating pustules and vomiting blood. There had been outbreaks of plague for a thousand years prior, but nothing compared to what happened to medieval Europe between 1346 and 1353, and especially 1348.

Take this account from that awful year, written by a chronicler in Siena: “And I, Agnolo di Tura, called the Fat, buried my five children with my own hands. And there were also those who were so sparsely covered with earth that the dogs dragged them forth and devoured many bodies throughout the city. There was no one who wept for any death, for all awaited death. And so many died that all believed that it was the end of the world.”

In the space of a couple of years, the population of Europe by some estimates dropped from 75 million to 50 million. Think of it: 25 million—a third of the population—all dead. Yet, when this unimaginable catastrophe happened, the world did not end.

Europe bounced back within a couple of centuries, and the average peasant was better off for the labor shortage. Humanity went on to survive a few more horrific but comparatively minor bouts with the plague, and then, with the advent of antibiotics, put Yersinia pestis out of mind. Oh, sure, it still exists in wild reservoirs in places like the mountains and foothills of California, but hey, when was the last time you worried about the Black Death of Tehachapi?

You don’t. And you won’t. So long as our antibiotics remain effective.

Because here are three mostly encouraging lessons about the end of the world:

- It probably isn’t literally the end of the world.

- Humanity is surprisingly resilient and adaptable.

- If the end can’t be avoided, it might at least be postponed.

In support of that thesis, consider a few of the apocalypses forecast for the last half-century.

Start with the population bomb. When Paul and Anne Ehrlich wrote the famous book with that title in 1968, they revived the Malthusian terror that the rapid growth of the human population will outstrip our ability to feed ourselves, resulting in famine and wars, or as Malthus himself would have put it, “misery and vice.”

(The Reverend Thomas Robert Malthus was concerned with imminent overpopulation in the 19th century, particularly amongst the “lower classes of society.” Scarcity, in his view, was God’s way of improving Man. Malthus was against aid to the poor on the grounds that it encouraged them to have children, and in favor of taxing imported wheat so as to make food more expensive and therefore children, as well. Overall, he thought the best course of action for the poor was sexual abstinence followed by late marriage. Among the fictional characters inspired by Malthus is Ebenezer Scrooge.)

The Ehrlichs predicted that millions upon millions would die of starvation in the ’70s and early ’80s. To prevent subsequent famines, it being too late to stop the coming one, they argued that humanity would have to achieve zero or negative population growth. To do so, they proposed such solutions as improved contraception, sterilization, and even a “luxury tax” on diapers. Furthermore, in the Ehrlichs’ prescription for the future, food aid to foreign countries should be contingent upon their adopting similar policies. Harsh? Maybe, but only thus could disaster be avoided.

Except it didn’t happen as foreseen by the Ehrlichs. New crop varieties, fertilizers, pesticides, and the mechanization of agriculture have enabled humanity to feed more than 3 billion additional people since 1968. India, which the Ehrlichs initially wrote off as all but doomed, has avoided major famine while more than doubling its population.

Warnings of catastrophic scarcity are still with us, of course. Current dire predictions include that we’ll run out of fish, that we’ll run out of topsoil, and that we’ll run out of bees. And these are all real worries. The oceans are overfished and are growing warmer and more acidic. We could face oceans dominated by slime, jellyfish, and the dreadful squid who may rise up and become our cephalopodic overlords, battering and frying all who disobey. Some estimates say we’ve lost half of the planet’s topsoil in just the last 150 years. If we can’t arrest the decline of bee colonies, many of the plants we rely on for food won’t be able to bear fruit.

But there are solutions to all these problems. The oceans are complicated, but there is hope in the form of better treaties to govern fishing and pollution. Topsoil can be retained and improved with better farming practices and by slowing deforestation. As for the bees, we’ve identified the likely class of pesticides that’s causing the problem. The hope is that regulation will come soon.

My favorite scarcity worry is helium. Seriously. Formed as it is through millions of years of radioactive decay, there’s only a finite supply of it in the planet; and when it’s free in our atmosphere, helium is so light it escapes into space. Yet, in the 1990s, Congress ordered that the U.S. National Helium Reserve be drawn down and closed, shutting down 35 percent of world supply. Congress reversed that decision last year. That’s good news.

Due to its inertness, helium is extremely useful in a wide variety of industrial processes, such as purging rocket fuel lines and cooling MRI machines and particle accelerators. The isotope helium-3 is a potential fuel for fusion reactors, should we ever work those out. Of course, given its scarcity, helium should be more expensive. Imagine if we discover powerful and clean energy only to realize that we’ve squandered the key ingredient on party balloons and squeaky voices.

Helium scarcity: Worry about it before it’s cool.

But the biggest scarcity scare right now is Peak Oil—the idea that someday soon (if it hasn’t happened already) we’ll have reached the limits of global oil production. As supplies dwindle, prices will rise, leading to political instability—before long, it’s resource wars, the collapse of the modern state, and some combination of Amish life and Mad Max. It’s hard not to sympathize with such an idea when you’re standing at a Bay Area gas pump, paying north of $4 a gallon, wondering if you could cut it as a wily gyrocopter pilot. But there’s a decent chance you won’t ever have to. Electric cars and hybrids are becoming more common, and average gas mileage is increasing even in regular cars. Advances are being made in biofuels, and more and more renewable energy is being generated every year.

And finally, melting ice caps are opening up new oil reserves to be exploited. That’s a colossal irony, of course, given the clear connection between fossil fuels and global warming, which many believe may be the greatest existential threat currently facing humanity. In that sense, it’s not the scarcity of coal, oil, and gas we should fret about, but their abundance. Burn it all, say the climate experts, and we’re cooked.

In general, economists tend to see scarcity as a prod to human innovation. Rising costs encourage us to do more with less, or to find alternative means to an end. Flattening farm yields have led to new crops and better fertilizers. Higher water prices encourage drip irrigation. The dwindling supply of trees drove Europe to begin heating with coal.

The rejoinder to that kind of optimism, however, is that someday the Malthusians may well be proved right. Just because we haven’t yet reached the carrying capacity of Earth doesn’t mean there isn’t one. Intuitively we know there must be, and yet we might not know what the limit is until we’ve soared past it and have to do our math backward while dining on shoelaces, crickets, and filet of neighbor.

But let’s not dwell on that.

If you want a ray of light when it comes to humanity’s potential to save itself from certain doom, consider the hole in the ozone layer, which, you may recall, threatened to turn sunlight into carcinogenic death rays from outer space.

The ozone layer protects Earth by shielding it from much of the sun’s ultraviolet radiation. And, unwittingly, we were destroying it. The hole was caused by chlorofluorocarbons, or CFCs, which had been originally developed as an inert replacement for the toxic gases used in early refrigeration systems. CFCs proved so safe and effective that they made possible cheap air conditioning—and spray cans. It was a triumph of chemistry.

Or so it seemed, until a scientist named Mario Molina, who incidentally earned his chemistry Ph.D. at UC Berkeley in 1972, joined forces with UC Irvine professor F. Sherwood “Sherry” Rowland to examine what happened to CFCs in the atmosphere. At first, the research didn’t seem particularly interesting. CFCs were, after all, inert. But Molina and Rowland discovered that when the compounds were broken down by solar radiation in the upper atmosphere, the chlorine released into the stratosphere ripped apart ozone molecules. In 1974, Molina and Rowland published a paper outlining all this in Nature.

There was, as you can imagine, significant resistance to these findings on the part of consumers—and especially by the chemical industry. The chairman of DuPont, which sold CFCs under the brand name Freon, took out a full-page newspaper ad decrying the vilification of such a useful product. Molina and Rowland persisted, speaking at conferences, giving interviews to the press, and testifying at legislative hearings. As the evidence grew that there was in fact a giant hole in the ozone layer, people started to worry and politicians took notice. Consumers boycotted spray cans. Nations passed laws limiting CFCs. And then in 1987, 46 nations negotiated and soon ratified the Montreal Protocol, pledging to reduce CFCs and related chemicals by 50 percent within 12 years. The initiatives proved so effective that subsequent revisions have changed that goal to 100 percent reduction within a decade. By now, 197 countries have joined in this pledge. It’s projected that the ozone layer will heal by 2050.

Humanity decided it didn’t want to die for hairspray. Good for us.

It wasn’t the only bullet we’ve dodged. Certainly the greatest source of dread in the late 20th century was the war that never happened—in the immortal words of Major T.J. “King” Kong (Slim Pickens) in Stanley Kubrick’s Dr. Strangelove, “nuclear combat toe-to-toe with the Rooskies.”

After 1945, the United States of Imperialist Warmongers and the Soviet Union of Godless Red Bastards built something like 125,000 atomic weapons and aimed most of them at each other. For the generations following Hiroshima and Nagasaki, World War III seemed imminent, even inevitable. The world would end under a mushroom cloud.

The scientists who built the first atomic bomb and witnessed its first detonation surely believed in its apocalyptic power. Robert Oppenheimer (point of local pride: a Berkeley physics professor) directed the Manhattan Project and said that watching the atomic fire of the Trinity test caused him to remember a line from the Bhagavad Gita: “I am become death, the destroyer of worlds.” Kenneth Bainbridge, who ran the test, was a tad less grandiose. He turned to his peers and said, “Now we are all sons of bitches.”

At the height of the Cold War, we had nukes in planes above the Arctic Circle, nukes in tractor trailers, nukes perched atop rockets buried in farm fields, and nukes in submarines that skulked off each other’s coasts in a constant state of readiness. We even had nukes you could shoot out of howitzers, and nukes as landmines. These belonged to what we called our “tactical arsenals,” a name that implied we might fancy a light thermonuclear exchange without wanting to, you know, make a Thing out of it.

Tactical nukes notwithstanding, the guiding (if not official) policy of both sides was Mutual Assured Destruction, or MAD. Officially, the U.S. liked to maintain that it could control the size of a nuclear war, and the USSR said it planned on surviving an all-out nuclear war. Both sides promised to annihilate the other if attacked. That may itself sound mad, and it is. But insanity was part and parcel of a credible nuclear deterrent; you had to hope the other guy wasn’t crazy enough to launch a first strike while at the same time convincing him that you were crazy enough to strike at the slightest provocation. Lucky for us, neither country ever dared, in the words of Randy Newman, to “drop the big one and see what happens.”

And what would have happened? Well, at the low end, the expected result was several megadeaths (a megadeath was a unit of measure coined in the atomic age to represent one million dead). At the high end, billions of dead. And that’s before you start worrying about nuclear winter—the idea that a nuclear exchange would throw millions of tons of soot into the atmosphere, resulting in falling temperatures, crop failures, and perhaps an instant ice age. The sliding scale of nuclear destruction starts at cities, moves up to nations, to all of civilization and perhaps to the entire species, leaving Earth to its true inheritors, the cockroaches.

But here’s the really fantastic news about nuclear annihilation: It hasn’t happened yet.

The fact is, we’ve had our hands on Shiva’s Tinker-Toys for nearly 70 years and we’re still here. If you think about the great and terrible horrors humans have perpetrated on each other throughout history, it’s remarkable that we’ve refrained for decades from fusion-powered global slaughter. So, maybe there’s hope yet.

The Bulletin of the Atomic Scientists is still worried, though: worried about the 5,500–22,400 nuclear weapons still in existence, not to mention humanity’s lack of will in facing global climate change. Since the dawn of the nuclear age, the Bulletin has kept an index of civilizational perils it calls the Doomsday Clock. The time on the clock changes every few years based on the Bulletin’s risk assessment. In 1953, after both the U.S. and the USSR built their first hydrogen bombs, the clock was moved to 11:58. When the Cold War ended in 1991, the clock was reset to 11:43. Its current setting is also the Bulletin’s registered trademark: “It is 5 minutes to midnight.”

In other words, “Not yet.”

And, in the end, isn’t that the nicest thing you can say about the apocalypse?

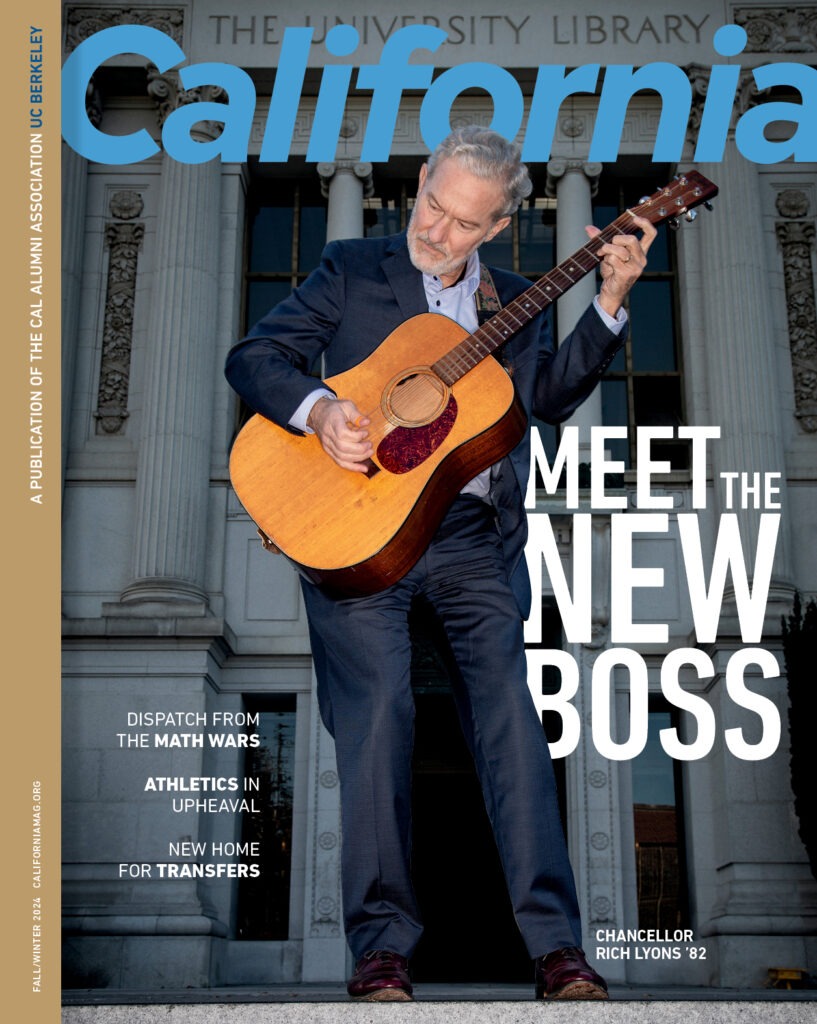

Brendan Buhler is a frequent contributor to California and interim Science Editor for this issue.

From the Summer 2014 Apocalypse issue of California.