Hear the letters BMI and the first thing you probably think of is “body mass index.” Keep your eyes peeled, because “brain-machine interfaces” could soon hijack more than just the acronym.

Jose Carmena was in a mid-Ph.D. crisis studying robotics at the University of Edinburgh when Miguel Nicolelis, John Chapin, and colleagues from Duke University and the MCP Hahnemann University School of Medicine published a paper showing that lab rats could control a simple robotic device using brain activity alone (and an implant). That was 1999, and the paper marked the beginning of BMI as a field. Carmena never looked back. Born into a family of physicians, he had finally found a field that married his intellectual interests in robotics and the brain to his personal ones. BMIs had a very clear clinical application: creating better neuroprosthetic devices for people with movement disabilities.

Carmena is now a professor of electrical engineering and neuroscience at Berkeley and co-director of the Center for Neural Engineering and Prostheses at Berkeley and UCSF. His BMI research explores fundamental questions such as “How do you connect the brain to a machine?” and “How can we turn thought into action, or sensation into perception?” One day, BMIs could enable patients with prosthetic hands to braid hair or play Chopin by giving them a combined sense of touch and precise mobility that equals or even surpasses natural biological capabilities.

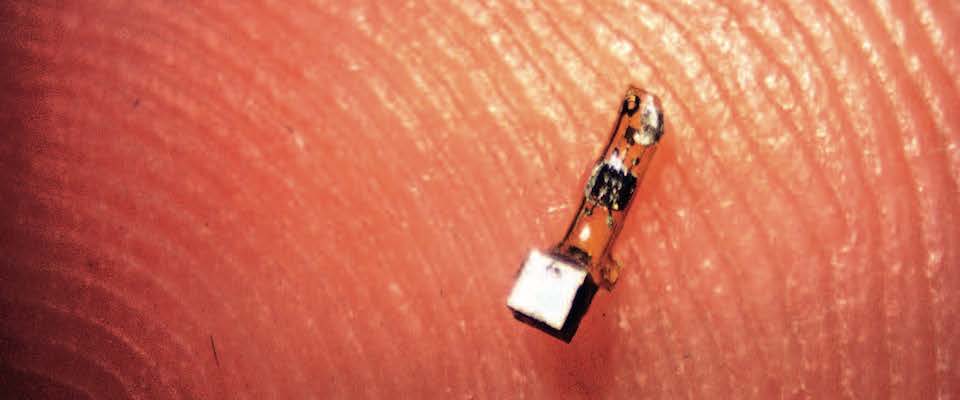

A BMI is a system that connects the brain to a machine using three main components, all of which are under rapid and exciting development at Berkeley. The first component is the neural interface, a sensor that reads the firing of neurons in the brain. It needs to be tiny, wireless, and durable enough to be surgically implanted and left in place for a substantial part of the user’s lifetime. Second, that information must be sent to an external decoder, essentially a computer program that uses mathematical models to translate the brain’s activity into output signals. Those signals then control the third element of the BMI, the “machine,” for example a prosthetic arm or leg. Or, eventually, your phone or Facebook? Yes, because, let’s be real, we’ve seen those things called movies, so we know where this is headed.
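To give a rough sense of what a decoder’s “mathematical models” can look like, here is a minimal Python sketch of one classic approach from motor-cortex research, the population vector method (not necessarily what Berkeley’s decoders use). It assumes each neuron fires fastest for one preferred movement direction, following idealized cosine tuning, and the decoder adds up every neuron’s “vote” along its preferred direction. The neurons, their tuning values, and the function names (`make_neurons`, `firing_rates`, `population_vector`) are hypothetical, and the simulated firing rates are noise-free.

```python
import math

# Hypothetical cosine-tuned neurons: each has a baseline rate, a modulation
# depth, and a preferred movement direction (radians). Values are made up.
def make_neurons(n, baseline=10.0, depth=8.0):
    """Return n neurons with preferred directions spread evenly around a circle."""
    return [
        {"baseline": baseline, "depth": depth, "preferred": 2 * math.pi * i / n}
        for i in range(n)
    ]

def firing_rates(neurons, direction):
    """Simulate noise-free firing rates (spikes/s) for an intended direction."""
    return [
        nrn["baseline"] + nrn["depth"] * math.cos(direction - nrn["preferred"])
        for nrn in neurons
    ]

def population_vector(neurons, rates):
    """Decode a 2-D movement vector: each neuron votes along its preferred
    direction, weighted by how far its rate sits above or below baseline."""
    x = y = 0.0
    for nrn, r in zip(neurons, rates):
        weight = (r - nrn["baseline"]) / nrn["depth"]
        x += weight * math.cos(nrn["preferred"])
        y += weight * math.sin(nrn["preferred"])
    # With n >= 3 evenly spaced preferred directions, the sum equals (n/2)
    # times the intended unit vector, so rescale by 2/n.
    scale = 2.0 / len(neurons)
    return x * scale, y * scale

neurons = make_neurons(8)
intended = math.radians(30)
rates = firing_rates(neurons, intended)
vx, vy = population_vector(neurons, rates)
print(round(math.degrees(math.atan2(vy, vx)), 1))  # 30.0
```

In practice, decoders have to cope with noisy spike counts and are usually fit to recorded data (for example with linear regression or a Kalman filter) rather than assuming ideal tuning like this.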

In the ideal future BMI system, that final, synthetic extension of the body would also read tactile, spatial, and other sensory information and send it back to the user’s brain, so that the user can respond and adjust to real-life situations in real time, fully closing the BMI system’s loop. This kind of feedback would be essential for, say, searching for an object in the dark, when the user would need to feel around for it as naturally as possible.

Since the beginning of BMI research, neural interfaces, the first component, have been a main “bottleneck” in the system, explains Michel Maharbiz, Carmena’s colleague, co-inventor, and friend, who is a professor of electrical engineering and computer sciences (EECS). First-generation interfaces required batteries and tethered connections, with wires running in and out of the body. That posed two problems: the hardware degrades and needs replacing, and, as Maharbiz puts it, “No one wants those in their heads for 30 years.”

Second-generation models tried wireless radios, but because “your body is basically a bag of saltwater, radio frequency waves don’t readily pass through it,” says Maharbiz. Sometime in 2012 (Carmena says he remembers the exact moment), an excited Maharbiz called him up: “Guys, guys, guys, let’s go for dinner. I have an idea I want to run by you. It’s crazy!” Maharbiz had been walking through the parking lot on Hearst Avenue when the revelation struck: “Ultrasound!”

A year later, Maharbiz, Carmena, and EECS and neuroscience colleagues published an online paper on one of Berkeley’s most groundbreaking contributions to BMI research: a new type of neural interface they called “neural dust,” which uses ultrasound instead of radio frequencies both to supply power and to get data in and out of the body. Working with Dongjin Seo, Jan M. Rabaey, and Elad Alon, Carmena and Maharbiz have managed to build neural dust motes about the size of a 1-millimeter cube: wireless, battery-free, and small enough to be placed in the peripheral nervous system and muscles. Each tiny mote contains a piezoelectric crystal that is pinged by external ultrasonic vibrations. The crystal converts those vibrations into electricity, powering a transistor that is connected to a nerve or muscle fiber. When the fiber’s voltage changes, the transistor alters how the crystal vibrates, and with it the “echo” that bounces back to the external decoder. That echo, called “backscatter,” is what lets the scientists figure out the voltage.
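The backscatter step can be pictured as a calibrate-then-invert problem. The minimal Python sketch below assumes, purely for illustration, that the echo amplitude changes linearly with the voltage at the mote over a small range. The linear model, the calibration numbers, and the function names (`fit_calibration`, `estimate_voltage`) are all hypothetical, not taken from the Berkeley work.

```python
# Illustrative assumption: over a small range, backscatter amplitude
# varies linearly with the neural voltage at the mote's electrodes.
def fit_calibration(voltages_uv, echo_amplitudes):
    """Least-squares line fit: amplitude ~ offset + gain * voltage."""
    n = len(voltages_uv)
    mean_v = sum(voltages_uv) / n
    mean_a = sum(echo_amplitudes) / n
    cov = sum((v - mean_v) * (a - mean_a)
              for v, a in zip(voltages_uv, echo_amplitudes))
    var = sum((v - mean_v) ** 2 for v in voltages_uv)
    gain = cov / var
    offset = mean_a - gain * mean_v
    return offset, gain

def estimate_voltage(echo_amplitude, offset, gain):
    """Invert the calibration line to recover a voltage from one echo."""
    return (echo_amplitude - offset) / gain

# Hypothetical bench calibration: known test voltages (microvolts) applied
# to the mote and the resulting echo amplitudes (arbitrary units).
test_voltages = [-100.0, -50.0, 0.0, 50.0, 100.0]
measured_echoes = [0.98, 0.99, 1.00, 1.01, 1.02]

offset, gain = fit_calibration(test_voltages, measured_echoes)
print(round(estimate_voltage(1.005, offset, gain), 1))  # 25.0
```

The straight-line fit keeps the idea visible; a real system would likely also have to pick the mote’s echo out of other reflections and contend with noise.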

“The physics of ultrasound are perfect,” says Maharbiz, “because our body will let pressure waves travel through it fairly well,” and with much less energy than radio frequencies require. At its current size, neural dust already has potential for a variety of clinical applications, such as real-time monitoring of the peripheral nervous system and internal organs. Carmena says that neural dust will eventually replace the wire electrodes used today.

The longer-term challenge is to shrink the motes to their 50-micron target size, small enough for use in the brain and central nervous system. In theory, neural dust electrodes could be placed throughout the brain, allowing a person with paralysis to control a robotic limb or computer. Unlike current implantable sensors, which degrade within one to two years, these could be left in the brain for a lifetime.

The speed with which BMI and neural dust technologies have progressed is almost frightening. “When I came [to Berkeley] in 2005 there was no one here working on neuroengineering, neurotechnology, or BMI,” remembers Carmena. Now, with an expanded team, “We do lots of wacky stuff,” says Maharbiz. “We have a project where we are looking at how you could take microbes with flagella and marry them to chips to build 1-millimeter swimming robots. … You sort of create your own reality; that’s one of the beauties of working at Berkeley.”

“As we look forward, say 50–60 years,” says Maharbiz, “I see technology increasingly becoming a hybrid between synthetic things of the kind people normally think about—things made out of plastic and metal and chips—and organic things. The reason is that we are getting progressively better at hacking cell-based technology, or cell-based systems.”

Neither Maharbiz nor Carmena denies that advances in BMI technology will raise profound questions and debates in neuroethics, a rapidly growing field in which they want to become more involved. “I think that is where humanity is going, this blend of technology and flesh,” says Carmena.

“Do you think that will happen in our lifetime?” I ask.

“Oh yes. We need to start thinking about all of the implications of being able to read people’s minds,” he says, and even of being able to add thoughts to them.

Such brain-stimulating technology, he says, could change our sense of self. “It’s a very profound thing. It’s more than, ‘Oh, I can control an artificial arm to do something.’ Now we’re talking about the mind.” And it includes, he says, “the new wave of these mental prosthetics,” which, while still in the early phases of development, might eventually be used to help people with psychiatric conditions such as PTSD, anxiety, and depression.

In short, “neuroethics is going to be extremely important—but that,” he laughs, “is for another issue in the magazine.”

Marica Petrey is a filmmaker, musician, and frequent contributor to California. Find more of her work at www.madmarica.com.